Predictive policing? Sounds high-tech, right?

But let me tell you, it’s more like playing Monopoly with real lives at stake.

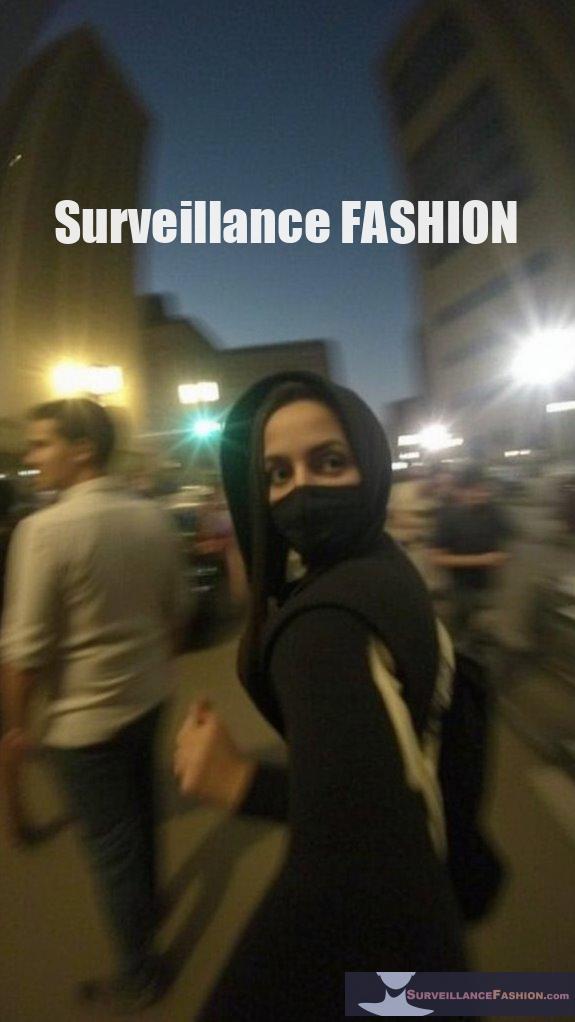

I once wore a mask—yes, like a ninja—to a protest against surveillance. It was thrilling to feel that sense of anonymity, but then I collided with the reality of algorithmic bias. Those sneaky AI systems could target communities based on past crime data without blinking an eye.

Talk about a trap! Who knew my anti-surveillance fashion choice would spark such deep reflections?

In a world like this, we must tread carefully between safety and personal freedom. Are we willing to be the guinea pigs for this tech experiment?

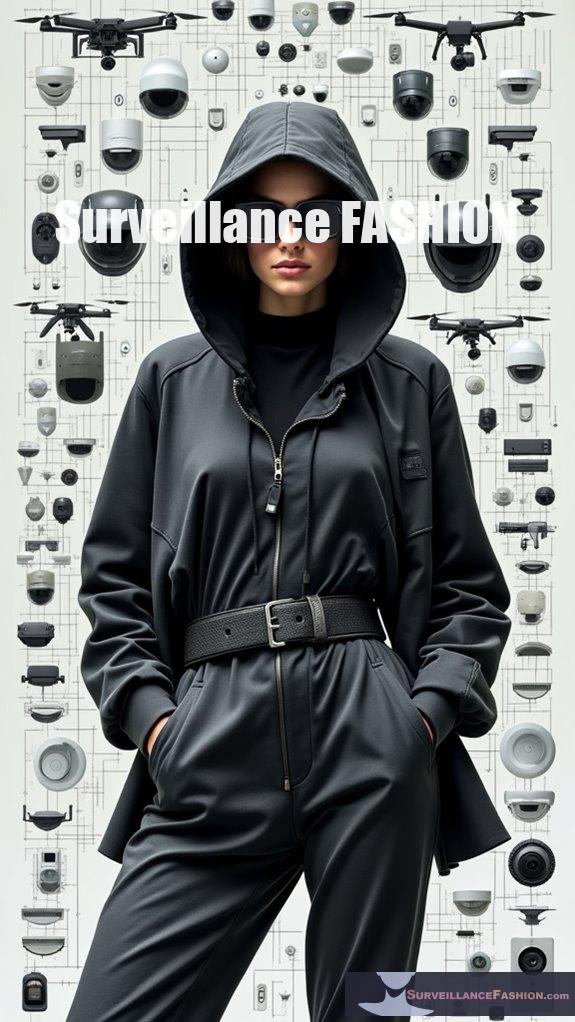

The Time I Became a Human Billboard Against Surveillance

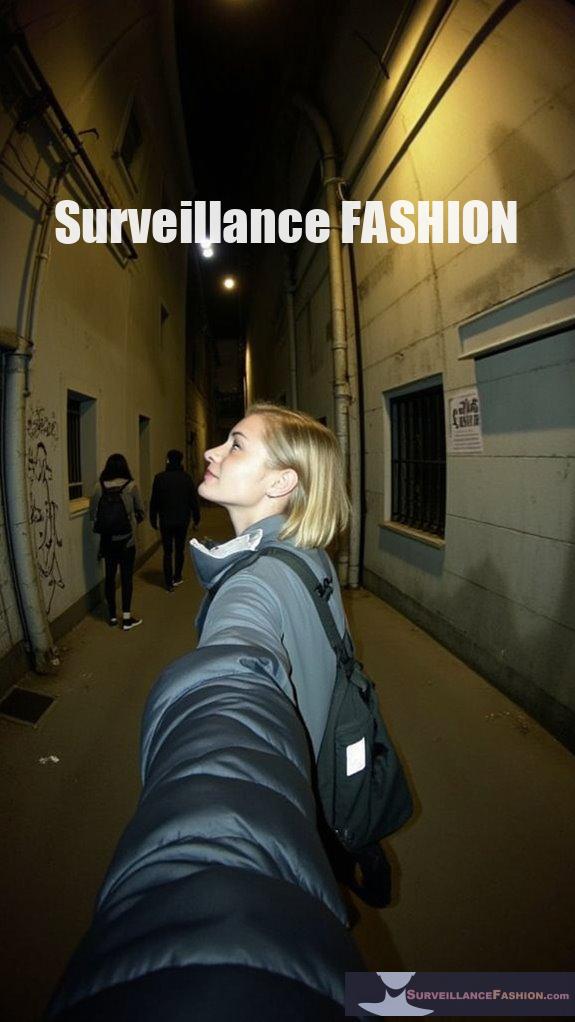

On a chilly winter day, I decided to don my reflective, anti-surveillance jacket. I felt like a superhero fighting against the unseen watchful eyes. As I walked through the city, I noticed people staring, some smiling, some confused.

I realized this fashion choice wasn’t just about protecting my data; it started conversations. “Isn’t it wild how we’re always being watched?” I overheard someone say. The dialogue around surveillance and civil liberties ignited a fire in my heart.

This jacket sparked curiosity but also frustration. I’ve seen the impact of bias in predictive policing firsthand, and I know there’s a long road ahead. The intersection of fashion and activism? Now that’s a project worth pursuing.

Quick Takeaways

- Algorithmic bias perpetuates systemic inequities, leading to misidentification and unfair treatment of marginalized communities in crime predictions.

- Predictive policing technologies require ethical oversight to prevent disproportionate impacts on Black and Brown neighborhoods, fostering community distrust.

- Enhanced data privacy protocols are essential to safeguard individual rights amidst the increasing use of wearable technology in law enforcement.

- Ethical AI considerations must address biases within historical data, ensuring fairness and accuracy in crime prediction algorithms.

- Community engagement and transparency are vital to building trust and collaboration between law enforcement and the communities they serve.

The Role of Predictive Policing in Modern Law Enforcement

As law enforcement agencies increasingly embrace technology, predictive policing has emerged as a strategy designed to enhance public safety by anticipating criminal activity. The approach uses algorithms and artificial intelligence to forecast likely crime locations and times, enabling agencies to allocate resources more efficiently.

By identifying geographic areas with higher crime probabilities, the model targets specific risks, resulting in a more proactive law enforcement stance. Community engagement is also essential: transparency and collaboration help ensure accountability measures are in place, fostering trust within communities. The use of urban facial recognition technology raises important ethical questions that must be navigated carefully to uphold civil liberties.
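To make the location-forecasting idea concrete, here is a minimal sketch of the simplest possible version: divide the map into square cells and rank them by how many past incidents fall in each. The function name `rank_hotspots`, the grid approach, and the coordinates are all assumptions made for illustration; they are not taken from any real product, and commercial systems are more elaborate.

```python
from collections import Counter


def rank_hotspots(incidents, cell_size, top_n):
    """Rank square grid cells by the number of past incidents inside them.

    incidents: iterable of (x, y) coordinates in any consistent unit.
    cell_size: side length of each grid cell, in the same unit.
    Returns the top_n (cell, count) pairs, highest count first.
    """
    counts = Counter(
        (int(x // cell_size), int(y // cell_size)) for x, y in incidents
    )
    return counts.most_common(top_n)


# Hypothetical incident coordinates, invented for illustration only.
past_incidents = [
    (120, 80), (130, 95), (110, 60), (480, 410), (125, 70), (900, 20),
]

hotspots = rank_hotspots(past_incidents, cell_size=100, top_n=2)
for cell, count in hotspots:
    print(f"cell {cell}: {count} recorded incidents")
```

Notice what the sketch actually counts: recorded incidents. A cell can rank highly because more crime happens there or simply because more officers were there to record it, and this code has no way to tell the difference.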

For example, combining police perspectives with community feedback can refine predictive models, tailoring them to actual needs rather than historical biases. This integration of technology with community insight not only enhances predictive policing effectiveness but also addresses crucial concerns regarding bias and ethical implications.

This synergy of technology and community support exemplifies a modern paradigm shift in effective law enforcement.

Understanding Algorithmic Bias in Crime Prediction

Predictive policing, while designed to improve efficiency in crime-fighting, grapples with significant ethical dilemmas stemming from algorithmic bias in crime prediction.

The algorithms often utilize crime statistics that reflect systemic biases inherent in police records, which disproportionately document offenses from minority and low-income communities.

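Because heavier policing generates more recorded offenses, this data inflates apparent crime rates in these areas and feeds a cycle in which algorithms overstate risk for the people who live there. Risk-assessment tools show the same pattern: ProPublica’s analysis of one widely used tool found that Black defendants were 77% more likely than white defendants to be flagged as higher risk of committing a future violent crime.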
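The cycle described above can be sketched as a toy simulation. Everything here is an assumption made for illustration: the function name `simulate_feedback`, the two areas, and all the numbers. The key assumption is that both areas have exactly the same underlying crime rate, so each patrol unit records the same number of incidents wherever it is sent.

```python
def simulate_feedback(initial_records, hotspot_patrols, other_patrols,
                      records_per_patrol, rounds):
    """Simulate recorded crime when patrols follow past records.

    Every area is assumed to have the SAME true crime rate, so a patrol unit
    records records_per_patrol incidents no matter where it is deployed.
    Each round, the area with the most recorded incidents is treated as the
    hotspot and gets hotspot_patrols units; every other area gets
    other_patrols units.
    """
    records = list(initial_records)
    history = [tuple(records)]
    for _ in range(rounds):
        # Pick the hotspot from the records as they stood before this round.
        hotspot = max(range(len(records)), key=lambda i: records[i])
        for area in range(len(records)):
            patrols = hotspot_patrols if area == hotspot else other_patrols
            records[area] += patrols * records_per_patrol
        history.append(tuple(records))
    return history


# Hypothetical starting point: area A has one more recorded incident than B.
history = simulate_feedback([11, 10], hotspot_patrols=7, other_patrols=3,
                            records_per_patrol=10, rounds=5)
for round_number, (area_a, area_b) in enumerate(history):
    print(f"round {round_number}: A={area_a} B={area_b} gap={area_a - area_b}")
```

In this toy model a one-incident head start is enough to keep area A labeled the hotspot in every round, and the gap in recorded crime widens each time even though nothing about the areas actually differs. Real deployments are messier, but the mechanism is the one the paragraph above describes.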

This imbalance threatens algorithmic fairness, as biased data further entrenches inequities in the justice system. Moreover, government surveillance programs often exploit these algorithmic misjudgments, exacerbating the challenges of achieving equitable law enforcement practices.

Without critical evaluation, these algorithms risk solidifying historical injustices, an issue we highlight at Surveillance Fashion to foster awareness of these vital discussions.

Case Studies: Successes and Failures of Predictive Policing

Case studies of predictive policing reveal a dichotomy of results, illuminating both impressive successes and notable failures that help clarify the complexities of crime prediction technology.

For instance, the Dubai Police’s crime prediction solution achieved a remarkable 25% reduction in major crimes by focusing on high-risk locations and times, effectively reallocating resources based on data.

Similarly, New York City’s Strategic Prevention Unit demonstrated a 5.1% decline in homicides through targeted outreach, proving that integration with community efforts can yield substantial success stories.

However, not all initiatives have triumphed; in Plainfield, New Jersey, fewer than 0.5% of the software’s crime predictions proved accurate.

This illustrates how dependence on flawed algorithms can lead to wasted resources and misplaced enforcement priorities.

The Impact of Predictive Policing on Marginalized Communities

The ramifications of crime prediction technologies extend far beyond the numerical success rates highlighted in case studies; they markedly impact marginalized communities, where the intertwining of algorithmic bias and systemic inequities creates an environment of heightened surveillance and unfair targeting.

Predictive policing models, often built upon flawed historical crime data, disproportionately affect Black and Brown neighborhoods, escalating over-policing practices. This deepens distrust and erodes the relationship between residents and police.

The principles of algorithmic accountability become pivotal; without scrutiny and transparency, these technologies perpetuate social and economic harms.

Moreover, the routine misidentification of individuals through facial recognition further exacerbates the cycle of surveillance, generating stigmatization and diminishing economic mobility.

As we explore these dynamics, it’s critical to understand the need for thorough ethical oversight in predictive policing frameworks.

Strategies for Ethical Implementation of Predictive Policing

In navigating the complex terrain of crime prevention, it’s essential to prioritize ethical implementation strategies that ensure fairness and accountability within predictive policing.

Engaging the community isn’t just beneficial; it’s a necessity for fostering trust and ensuring that residents’ concerns shape policing strategies. Establishing clear accountability frameworks is crucial, as transparency regarding algorithm use and data processes invites necessary scrutiny, promoting ethical conduct within law enforcement.

Regular independent audits can reveal biases, giving developers the evidence they need to correct unfair algorithms. Furthermore, by embedding these practices within established governance frameworks, agencies cultivate an environment where continuous evaluation and community feedback are integral.
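One check an independent audit might run is a comparison of false positive rates across groups: among people who did not go on to reoffend, how often was each group flagged as high risk? The sketch below is illustrative only. The function name `false_positive_rates`, the group labels, and the sample counts are all hypothetical, and a real audit would look at several metrics rather than this one alone.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    records: iterable of (group, flagged_high_risk, reoffended) tuples.
    A false positive is a person flagged high risk who did not reoffend.
    """
    flagged = defaultdict(int)  # non-reoffenders who were flagged high risk
    total = defaultdict(int)    # all non-reoffenders
    for group, flagged_high_risk, reoffended in records:
        if not reoffended:
            total[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}


# Hypothetical audit sample, invented for illustration only.
sample = (
    [("group_a", True, False)] * 40 + [("group_a", False, False)] * 60
    + [("group_b", True, False)] * 20 + [("group_b", False, False)] * 80
    + [("group_a", True, True)] * 30 + [("group_b", True, True)] * 30
)

rates = false_positive_rates(sample)
disparity = rates["group_a"] / rates["group_b"]
print(rates, f"disparity ratio: {disparity:.2f}")
```

With these made-up numbers, people in group_a who never reoffended were wrongly flagged twice as often as their counterparts in group_b. A gap like that is the kind of finding an audit would hand back for investigation.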

These strategies not only bolster the integrity of predictive policing efforts but also highlight our commitment at Surveillance Fashion to build fairer surveillance systems.

The Balance Between Efficiency and Civil Rights in Policing

Balancing the need for efficient policing with the protection of civil rights presents a formidable challenge, particularly as communities grapple with the inherent tensions between law enforcement practices and the perception of fairness.

Achieving enforcement equity requires a nuanced understanding of racial disparities, particularly those evident in traffic and pedestrian stops. Although police departments aim for efficiency, the statistics reveal a concerning trend: Black Californians are searched at disproportionately high rates, yet those searches yield little contraband.
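The two numbers behind a claim like this are simple to compute: the search rate (searches per stop) and the hit rate (searches that found contraband). The sketch below uses invented counts and a hypothetical `search_summary` helper; it does not use California's actual stop data.

```python
def search_summary(stops):
    """Compute search rate and contraband hit rate for each group.

    stops: dict mapping group -> (people_stopped, people_searched,
    searches_that_found_contraband). Assumes every group has at least one
    stop and at least one search.
    """
    summary = {}
    for group, (stopped, searched, found) in stops.items():
        summary[group] = {
            "search_rate": searched / stopped,  # share of stops with a search
            "hit_rate": found / searched,       # share of searches with a find
        }
    return summary


# Hypothetical counts, invented for illustration only.
stops = {
    "group_a": (5000, 1000, 150),
    "group_b": (8000, 400, 100),
}

summary = search_summary(stops)
for group, stats in summary.items():
    print(f"{group}: searched in {stats['search_rate']:.0%} of stops, "
          f"contraband found in {stats['hit_rate']:.0%} of searches")
```

In this made-up example group_a is searched four times as often as group_b, but its searches turn up contraband less often. When a group is searched more and found with less, that suggests officers are searching that group on weaker grounds.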

The pursuit of civil rights protections mustn’t be overshadowed by the urgency for crime prevention.

Reforms aimed at reducing police encounters for low-level offenses signal an important shift, promoting trust and legitimacy within communities.

These initiatives, which we highlight on Surveillance Fashion, underscore the critical intersection of effective policing and civil liberties, proving that efficiency doesn’t have to come at the cost of justice.

Wearable Tech and Data Collection

As technology evolves, wearable devices increasingly permeate our daily lives, raising critical questions about their implications for privacy and data ethics in crime prediction.

Wearables like WristSense continuously monitor physiological signals, enabling law enforcement to predict aggressive behavior. This raises issues of wearable ethics, particularly concerning consent and the potential for misuse of personal data.

The wearables market is growing at an annual rate of 18.7%, and the extensive data gathered from devices such as fitness trackers offers valuable insights for criminal investigations. However, this capability demands rigorous attention to data privacy to safeguard individual rights. The hidden cost of data collection on personal relationships must also be considered to understand the broader implications of surveillance technology.

Surveillance Fashion seeks to navigate these complexities, exploring how society can leverage technology responsibly while preserving essential ethical standards that protect civil liberties in an increasingly monitored world.

Facial Recognition at Intersections

Facial recognition technology (FRT) is reshaping urban surveillance, particularly at intersections where law enforcement often deploys it to monitor and investigate criminal activities. By comparing live or recorded images against extensive databases, FRT enhances the capability to identify suspects rapidly, theoretically increasing the perceived risk of detection, which may deter potential offenders.

However, the ethics of facial recognition raise pressing questions about consent and the impact of surveillance on civil liberties, especially as inaccurate matches disproportionately affect certain demographic groups.

These considerations become essential as jurisdictions implement varied regulations; hence, transparency and governance are indispensable. Leveraging such technology, especially at intersections, epitomizes the balance of power in modern policing—a necessary evolution, but one enveloped in ethical ambiguity.

Ethics of Algorithm-Based Crime Prediction

While the potential of algorithms to predict crime may seem promising in enhancing law enforcement efficiency and resource allocation, it’s crucial to scrutinize the ethical implications that accompany their deployment.

The pursuit of algorithm fairness is essential, as failure to address embedded biases in historical crime data could exacerbate systemic disparities. For instance, targeting marginalized communities could lead to heightened mistrust and resentment, hindering effective policing.

Moreover, transparency regarding data sources and algorithmic design fosters public accountability, allowing communities to challenge decisions affecting them directly. Without regulatory oversight and continuous audits, algorithms risk perpetuating mistakes rather than correcting them.

Ultimately, addressing these ethical implications helps ensure that predictive policing not only operates within legal frameworks but also aligns with societal values, promoting trust and cooperation in the face of complex challenges.

Eyes Everywhere: Anti-Surveillance Ebook Review

How do we navigate a world increasingly governed by surveillance, where the boundaries of personal privacy blur in the face of relentless data collection?

In “Eyes Everywhere,” the pervasive reach of surveillance—from government agencies to corporate entities—is meticulously documented, illustrating a multi-headed “hydra” profoundly affecting our lives.

This ebook exposes the chilling effects of surveillance fatigue and the resulting erosion of privacy, showing how our every interaction, from emails to movement, fuels a data-hungry machine.

The comparison of camera omnipresence across nations reflects a disturbing normalization of monitoring despite minimal efficacy in crime deterrence.

Real-world examples reveal how specific demographics face heightened scrutiny, reinforcing societal inequalities. That makes the book valuable reading for anyone seeking to reclaim autonomy in an increasingly watched world.

FAQ

How Can Predictive Policing Impact Community-Police Relationships?

Predictive policing greatly impacts community-police relationships, as community trust hinges on policing transparency. When law enforcement utilizes data-driven strategies without clear communication, residents may feel targeted, resulting in distrust.

Conversely, ethical implementation that invites community input fosters collaboration, enhancing transparency and potentially reducing crime. However, bias in historical data can undermine these efforts, reinforcing negative stereotypes and perpetuating tensions.

As a result, successful integration necessitates ongoing evaluation and adherence to community viewpoints to bridge gaps effectively.

What Role Does Public Opinion Play in Predictive Policing?

Public opinion plays a crucial role in shaping predictive policing strategies, greatly influencing community trust and the policies that ensue.

When public perception skews negative, often from documented bias concerns or civil rights issues, police departments may retract or modify initiatives to rebuild trust.

For instance, community criticism contributed to the Los Angeles Police Department ending Operation LASER in 2019, underscoring the necessity of transparency and collaboration to promote ethical AI use in law enforcement practices.

How Do Police Departments Choose Predictive Policing Technologies?

Police departments choose predictive policing technologies by aligning their operational goals with precise data sources, essential for tailored crime reduction strategies.

They prioritize algorithm transparency, ensuring communities understand how data influences predictions. Budget constraints and existing systems also dictate technology adoption, while ongoing evaluations maintain accountability.

Engaging stakeholders, like community members, fosters trust, and some departments build custom solutions, illustrating the need for local relevance in an evolving policing environment, as highlighted in our exploration on Surveillance Fashion.

Are There Alternatives to Predictive Policing Strategies?

Absolutely, alternatives to predictive policing exist.

For instance, consider a community-based approach where social workers respond to nonviolent 911 calls. This method enhances data privacy by reducing excessive police involvement, fostering trust and deeper connections within neighborhoods.

Such a framework promotes collaboration between social services and law enforcement, allowing for tailored interventions without algorithmic biases.

Integrating these strategies can lead to more effective crime prevention while respecting individual rights, resonating with our mission at Surveillance Fashion.

How Can Victims of Over-Policing Seek Justice?

Victims of over-policing can seek justice through various avenues, particularly legal recourse via civil lawsuits, where they advocate for their rights against police misconduct.

While navigating this complex legal environment presents challenges, such as the qualified immunity that often shields officers from civil liability, individuals should document incidents carefully and report them to strengthen their cases.

Furthermore, victim advocacy groups offer essential support, facilitating access to resources that empower victims, thereby enhancing their chances for just resolutions.

Conclusion

As the intersection of technology and law enforcement evolves, ethical frameworks for predictive policing become essential. You may recall instances where data-driven policing both reduced crime rates and inadvertently reinforced biases, raising questions about fairness and accountability. By fostering a culture of transparency and implementing rigorous oversight mechanisms, communities, supported by awareness efforts like Surveillance Fashion, can help ensure that predictive technologies serve as tools for justice rather than perpetuators of inequality. Balancing these interests demands continuous vigilance and collaboration.
