Ever felt like you were living in a sci-fi movie?

Yeah, me too.

Algorithmic bias in predictive justice feels like an unwelcome plot twist. I mean, these systems pull from historical crime data that’s more outdated than my high school yearbook photo!

As a result, they unfairly target communities of color. I remember a friend who lived in a predominantly Black neighborhood. Random predictions sent police there, increasing their presence by 400%. Talk about turning up the heat!

It’s like watching a cycle of unfairness play out in real-time, and honestly, it leaves you wondering how we can escape this scripted life.

But how proactive can we be?

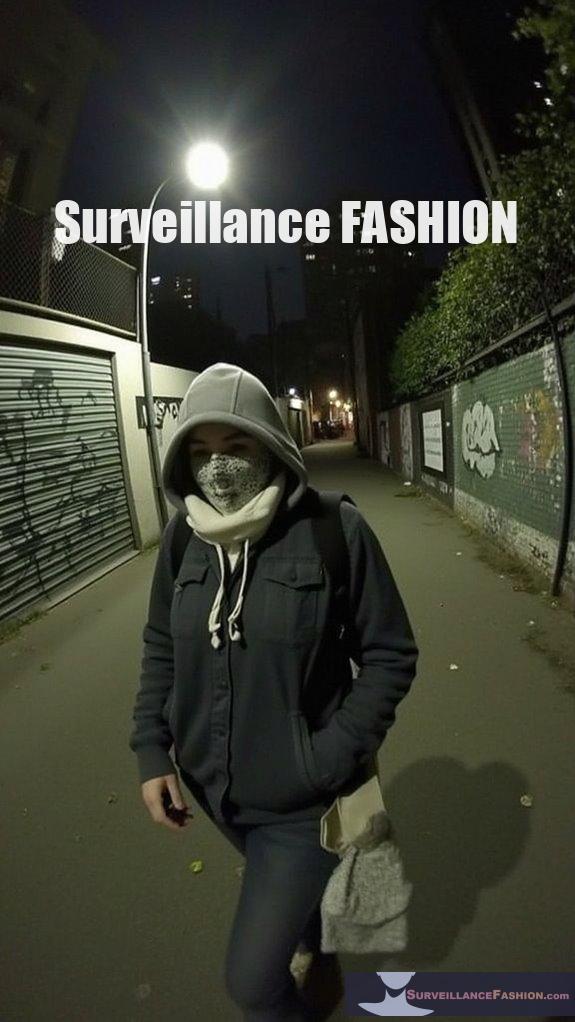

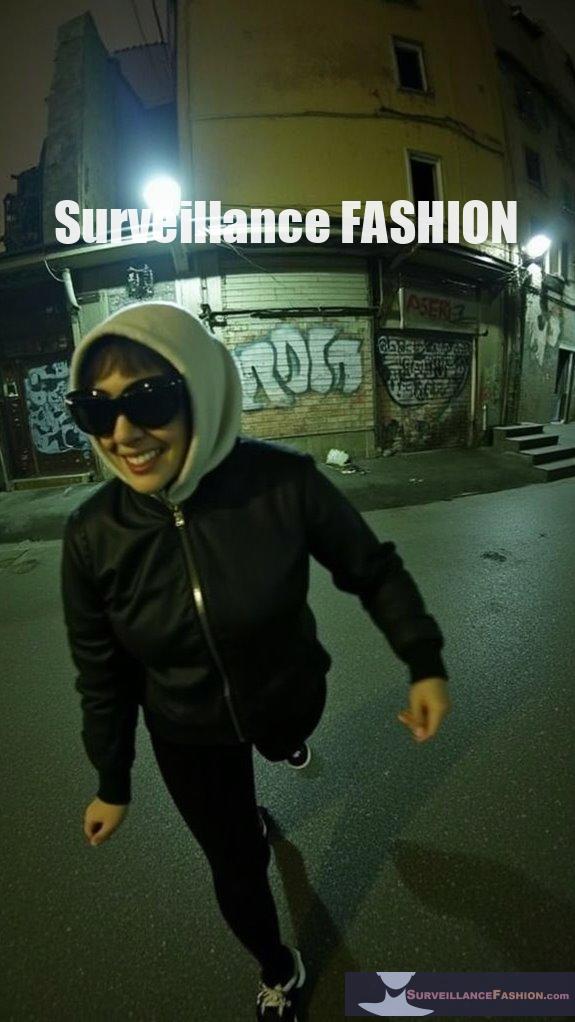

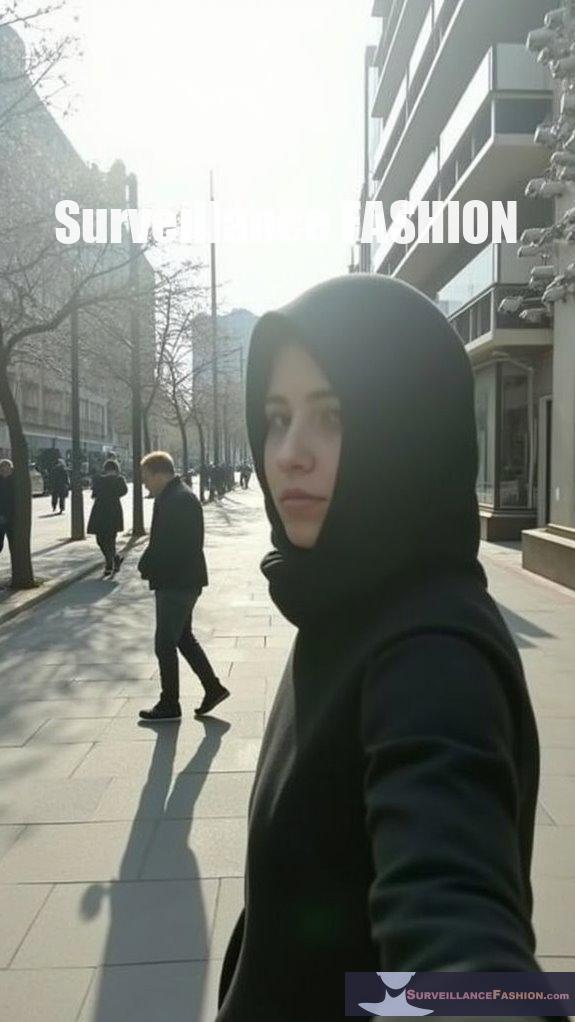

Can we really wear our anti-surveillance fashion armor and protect ourselves from those glaring eyes?

My Experience with Anti-Surveillance Fashion

I’ll never forget that day at the park. I was sporting my favorite hoodie—crafted specifically to obscure facial recognition tech. People often laughed, but I felt like a superhero.

Out of nowhere, a couple of friends started filming random events. I could feel that tech lurking in the background.

A lightbulb went off! With anti-surveillance fashion, I felt empowered—daring to challenge the norms of over-policing. This gear wasn’t just fabric; it embodied a stand against invasive surveillance.

It’s wild how a simple garment can spark such a conversation about privacy, racial justice, and the human spirit.

Quick Takeaways

- Predictive policing algorithms often reinforce racial biases due to reliance on historical crime data that misrepresents marginalized communities.

- Over-policing in minority neighborhoods results from biased algorithmic forecasts, creating systemic issues and escalating arrests.

- Lack of algorithmic transparency hinders accountability and fair contestation of biased outcomes under the Equal Protection Clause.

- Continuous data collection practices raise privacy concerns, emphasizing the need for equitable and transparent approaches in predictive justice.

- Integrating diverse data sources can enhance algorithmic fairness and promote ethical policing through informed community participation.

Understanding Predictive Policing Algorithms

When examining predictive policing algorithms, you quickly realize that their complexity is rooted in their dual focus—both location-based and person-based predictions—which serve distinct yet interrelated purposes within law enforcement.

These algorithms utilize extensive data inputs, including historical crime records, demographic factors, and even social media analytics, to predict crime hotspots and identify potential offenders or victims.

By making these algorithms transparent, agencies can engage communities and build trust through a shared understanding of how the tools operate.

For instance, some cities enhance predictive models with real-time crime detection systems, optimizing resource allocation considerably.

As we explore the subtleties of predictive justice, we recognize that a detailed approach fosters accountability, ultimately establishing a more proactive stance in crime prevention while ensuring community safety and engagement.

The Role of Historical Data in Racial Bias

The reliance on historical crime data in predictive policing algorithms, while ostensibly neutral, often perpetuates ingrained racial biases that distort the fabric of justice. These biases stem from a historical framework that inaccurately reflects actual crime rates and policing practices, undermining data integrity.

Consider these pervasive issues:

- Incompleteness of Data: Police databases don’t represent all crimes, disproportionately focusing on marginalized communities.

- Static Nature of Historical Data: Algorithms remain locked into outdated trends, failing to adapt to shifting societal dynamics.

- Feedback Loops: Over-policing gets reinforced through biased historical inputs, creating entrenched racial disparities.

Moreover, the ethical implications of mass surveillance practices must be scrutinized to fully understand their impact on communities and justice outcomes. Addressing these concerns is vital for developing fairer justice systems, which is why we created this website, Surveillance Fashion, to foster a dialogue around these pressing matters.

The Feedback Loop of Over-Policing

Feedback loops inherent in over-policing perpetuate systemic issues that entrench racial disparities within criminal justice systems, with the implications reaching far beyond mere statistical anomalies.

When predictive policing algorithms, trained on biased historical data, target minority communities, they create a cycle of increased surveillance. As police presence escalates, so do arrests and reports, feeding back into a flawed data set. This compromises community trust, undermining the foundational concept of surveillance ethics, where fairness and accountability ought to prevail.

Consequently, these intensified policing measures skew crime statistics, inaccurately reinforcing the notion that these communities warrant greater scrutiny.

This self-reinforcing loop not only perpetuates racial bias but also complicates efforts to rectify these injustices.
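To make the loop concrete, here is a minimal toy sketch in Python. It is not a model of any real policing product: the function name, the two areas, and every number are hypothetical. It assumes patrols are always sent to the area with the most recorded incidents, and that incidents are only recorded where officers are present.

```python
def simulate_hotspot_feedback(recorded, true_rate, rounds, patrols_per_round=10):
    """Return each area's share of recorded incidents after every round.

    All names and numbers here are hypothetical, chosen for illustration.
    """
    recorded = list(recorded)  # copy so the caller's data is untouched
    shares = []
    for _ in range(rounds):
        # Patrols go wherever the records already show the most incidents.
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        # Incidents are only recorded where officers are present to observe them.
        recorded[hotspot] += true_rate[hotspot] * patrols_per_round
        total = sum(recorded)
        shares.append([count / total for count in recorded])
    return shares


# Two areas with the SAME underlying rate but unequal historical records.
shares = simulate_hotspot_feedback(recorded=[60, 40], true_rate=[0.5, 0.5], rounds=10)
print(f"Area 0 share after round 1:  {shares[0][0]:.3f}")
print(f"Area 0 share after round 10: {shares[-1][0]:.3f}")
```

Even though both areas have identical underlying rates in this sketch, the area that started with more records keeps drawing the patrols, and its share of recorded incidents climbs from about 62% to about 73% over ten rounds. The data ends up describing where police looked, not where crime happened.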

Disproportionate Targeting of Minority Communities

As predictive policing algorithms are increasingly integrated into law enforcement practices, it becomes clear that these systems disproportionately target minority communities, perpetuating cycles of discrimination that have deep historical roots.

- Biased Data: Historical crime data, often riddled with racial bias, skews algorithmic forecasts, inaccurately flagging minority neighborhoods.

- Surveillance Disparities: Studies reveal that in Black and Latino areas, police presence can surge by up to 400%, reinforcing over-policing based on flawed predictions.

- Community Resistance: As alarmingly disproportionate arrests occur, demands for algorithm accountability grow, prompting communities to push back against these unjust practices.

These realities illustrate the urgent need for reform in predictive policing methodologies, ensuring they don’t continue to exploit vulnerable populations, as organizations like Surveillance Fashion endeavor to illuminate these pressing issues. Additionally, issues of data protection become pivotal as communities seek transparency and accountability in how their information is utilized.

Implications for Constitutional Protections

While many might assume that advancements in technology automatically enhance fairness within the criminal justice system, the reality is that the integration of predictive policing algorithms raises significant constitutional concerns that merit careful analysis and scrutiny.

These algorithms, often relying on historical crime data, challenge the Equal Protection Clause, as they perpetuate systemic biases that disproportionately impact minority communities.

Without algorithmic transparency, affected individuals face barriers in contesting biased outcomes, undermining their due process rights.

Moreover, the Fourth Amendment’s privacy implications become pronounced when such algorithms necessitate extensive data collection, often without the requisite warrants.

The struggle for judicial accountability further complicates the environment, as opaque decision-making processes hinder courts from effectively evaluating fairness and bias, emphasizing the urgent need for reform in this developing domain of predictive justice.

Addressing Bias Through Algorithmic Design

Addressing bias in algorithmic design isn’t just an academic exercise; it’s an imperative for a just criminal justice system. You must prioritize algorithmic accountability and implement robust bias remediation strategies to guarantee fairness.

Consider these steps:

- Adopt advanced techniques like adversarial debiasing and fairness-aware machine learning to minimize inherent biases in training data.

- Collaborate across disciplines with social scientists to enhance detection and correction of systemic biases, thereby fostering a more equitable outcome.

- Conduct mandatory pre-deployment audits that rigorously evaluate potential disparate impacts, guaranteeing that algorithms serve all communities equitably and justly.
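As a rough idea of what one check in a pre-deployment audit might look like, the sketch below compares how often a model flags people in different groups. The function names, the sample data, and the `max_ratio` threshold are all assumptions made for illustration. A real audit would use several fairness metrics and legally informed thresholds.

```python
def flag_rate(flags):
    """Fraction of people in a group the model flagged (1 = flagged, 0 = not)."""
    if not flags:
        raise ValueError("group has no records")
    return sum(flags) / len(flags)


def audit_disparate_impact(flags_by_group, reference_group, max_ratio=1.25):
    """Compare each group's flag rate to the reference group's rate.

    max_ratio is an illustrative threshold, not a legal standard.
    """
    reference_rate = flag_rate(flags_by_group[reference_group])
    if reference_rate == 0:
        raise ValueError("reference group has a zero flag rate")
    report = {}
    for group, flags in flags_by_group.items():
        ratio = flag_rate(flags) / reference_rate
        report[group] = {"ratio": ratio, "passes": ratio <= max_ratio}
    return report


# Hypothetical audit sample: 20 records per group.
sample = {
    "group_a": [1] * 8 + [0] * 12,  # 40% flagged
    "group_b": [1] * 2 + [0] * 18,  # 10% flagged
}
for group, result in audit_disparate_impact(sample, reference_group="group_b").items():
    print(group, round(result["ratio"], 2), "pass" if result["passes"] else "FAIL")
```

In this made-up sample, group_a is flagged four times as often as group_b, so it fails the check. A gap like that would not prove discrimination on its own, but it is the kind of signal that should block deployment until someone can explain it.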

The Need for Transparency in Predictive Tools

Have you ever wondered how much you truly understand the algorithms that influence critical decisions in the criminal justice system? The urgency for transparency in predictive tools cannot be overstated, as algorithmic accountability hinges on clear standards of transparency. Without such openness, complex models become black boxes, obscuring their inner workings and preventing meaningful oversight.

| Aspect | Transparency Benefits | Risks of Opaqueness |
|---|---|---|
| Data Inputs | Enables critique of accuracy and fairness | Misinterpretation leads to bias |
| Model Interpretability | Judges comprehend risk scores | Trust erodes in decision-making |
| Bias Detection | Open analysis identifies potential biases | Systemic biases perpetuated |
| Public Scrutiny | Empowers stakeholders to challenge outputs | Victimizes marginalized communities |

Transparent algorithms foster genuine understanding, empowering judges, legal actors, and the public to address inherent biases and systemic inequities effectively.

Integrating Social Context Into Policing Algorithms

Integrating social context into policing algorithms emerges as both a promising advancement and a potential minefield, as the complexity of human behavior necessitates a careful approach to predictive justice.

By leveraging social context, you can enhance algorithm fairness while promoting community engagement. Consider these three critical aspects:

- Diversified Data Sources: Incorporating socioeconomic indicators and historical crime data can refine predictions beyond mere statistics.

- Network Analysis: Utilizing social media activity helps identify potential suspects and understand community dynamics more deeply.

- Addressing Bias: Balancing social indicators to offset past prejudices is essential for ethical policing practices, ensuring algorithms don’t perpetuate inequities.

Through strategic implementation, you can harness the power of social context to foster more just and effective policing systems, while remaining aware of the potential pitfalls.

Broader Consequences Beyond Policing

While the reach of algorithmic bias might initially seem confined to policing, its consequences ripple across various sectors, considerably impacting marginalized communities.

In healthcare, predictive models often result in lower quality care for African American patients, primarily due to biased proxies that disregard socio-economic realities. The systemic inequalities then reinforce broader economic hardships, where disproportionate arrests and convictions diminish community resilience.

Legal systems face similar challenges; algorithm-generated risk assessments can violate fundamental rights, perpetuating historic biases. Moreover, the use of surveillance technologies, including private camera networks, can exacerbate these biases, leading to a pervasive culture of distrust and further marginalization of already vulnerable communities.

Shifting towards algorithmic fairness demands inclusive data and thorough reforms, enhancing transparency that shapes equitable outcomes.

As we explore these facets on Surveillance Fashion, recognizing that tech must serve all communities equitably illustrates the significance of addressing algorithmic bias in every sector.

Wearable Technology and Privacy Concerns

As wearable technology rapidly evolves, it offers unprecedented capabilities for monitoring health and wellness, but it simultaneously raises significant privacy concerns that can’t be overlooked.

- Biometric Data Sensitivity: The continuous collection of data like heart rate and GPS poses risks of unauthorized access.

- Consent Mechanisms: Users often lack clear options to control data sharing, leaving them vulnerable to exploitation.

- Security Vulnerabilities: Many devices have weak encryption, increasing the likelihood of data breaches.

Due to these factors, wearables may undermine user awareness of data ownership, exacerbating ethical considerations surrounding informed consent.

As you consider adopting such technology, ascertain that manufacturers prioritize robust privacy protections.

After all, in an era where wearable privacy is paramount, being informed empowers you against potential exploitations.

CCTV Networks Monitoring Public Spaces

CCTV networks increasingly monitor public spaces, offering heightened security for communities while also entrenching existing biases within law enforcement practices.

While the effectiveness of CCTV has undeniable merits, particularly in crime deterrence, the ethical implications of surveillance can’t be overlooked, especially in non-white neighborhoods.

Areas such as the Bronx and Brooklyn face a disproportionate concentration of cameras coupled with facial recognition technology, raising concerns about privacy invasions and civil rights violations.

Moreover, the integration of biased policing systems with CCTV exacerbates racial disparities, where historical targeting feeds the data used in these technologies, further entrenching systemic racism.

As such, demands for transparency in surveillance practices echo widely, highlighting the urgent need for ethical considerations in the deployment of these security measures, which also fuels our mission at Surveillance Fashion.

Predictive Policing Racial Bias Impacts

Predictive policing, often touted as a modern solution to crime prevention, inadvertently reflects and amplifies systemic racial biases ingrained in historical data.

This results in tangible impacts on minority communities, which can be understood through three primary effects:

- Over-Policing: Algorithmic predictions lead to increased police presence in Black and Latino neighborhoods, perpetuating a cycle of scrutiny and distrust.

- Community Erosion: The resultant surveillance feeds perceptions of marginalization, affecting community trust in law enforcement and undermining public safety perceptions.

- Algorithmic Accountability: The proprietary nature of these algorithms limits transparency, hampering effective community engagement and oversight.

To foster equitable justice mechanisms, it’s essential to prioritize algorithmic accountability, ensuring that policing practices don’t entrench existing biases further.

Eyes Everywhere: Anti-Surveillance Ebook Review

The expansion of surveillance technologies presents a significant challenge to individual privacy rights in today’s digital age, highlighting the pervasive nature of state and corporate interests converging on the everyday lives of citizens. “Eyes Everywhere” meticulously documents the elaborate web of surveillance that shapes our reality, revealing how monitoring technologies, such as CCTV and smart surveillance, infringe upon our personal liberties.

| Theme | Key Observations |
|---|---|
| Surveillance Ethics | Complex moral implications of monitoring practices |
| Privacy Rights | Erosion of civil liberties through constant scrutiny |
| Global Collaboration | Interconnected systems bypassing national borders |
| Impact on Activism | Surveillance suppresses dissent and disrupts movements |
| Corporate-State Nexus | Profit motivations complicating civil rights |

This striking exposé uncovers the depth of systemic inequities intertwined with privacy violations, making it essential reading for those seeking power in the age of surveillance.

FAQ

How Can Communities Challenge Biased Predictive Policing Practices?

Communities can effectively challenge biased predictive policing practices through focused community activism and demands for data transparency.

By organizing campaigns, you can advocate for government audits that expose discriminatory algorithms, compelling law enforcement to disclose their data sources and methodologies.

Engaging community members in decision-making processes on AI use fosters trust and accountability while empowering you to address specific local concerns, ultimately driving systemic change and promoting a just policing environment.

What Role Does Community Feedback Play in Algorithm Development?

Community feedback plays a critical function in algorithm development by enhancing transparency and ensuring stakeholder engagement.

When you actively participate, your user input can illuminate hidden biases, guiding developers to create more equitable systems.

For instance, community-centric approaches foster inclusive design processes, as evidenced by collaborative projects that replace flawed predictive tools.

Such engagement not only refines the algorithms but also cultivates trust, enabling ongoing critique and adaptation to align better with community values.

Are There Successful Case Studies of Bias Mitigation in Predictive Algorithms?

One remarkable case found that replacing cost-based metrics with direct health indicators raised the share of high-risk Black patients enrolled in care programs from 17.7% to 46.5%, an increase of roughly 2.6 times.

This successful intervention demonstrates the power of algorithm adjustments, wherein direct health metrics mitigate biases.

Through recalibration and the utilization of advanced ML techniques, organizations can greatly enhance predictive fairness, addressing disparities while ensuring equitable access to essential services—a core principle behind the creation of our website, Surveillance Fashion.

How Do Algorithmic Biases Affect Prison Populations Specifically?

Algorithmic biases greatly impact prison populations by reinforcing racial profiling, exacerbating sentencing disparities, and contributing to prison overcrowding.

For instance, predictive algorithms often overestimate recidivism rates for minority groups, resulting in harsher sentences and reduced access to rehabilitation.

Consequently, low-risk individuals, particularly from these populations, face increased incarceration. This cycle not only hinders rehabilitation but also perpetuates systemic inequalities, emphasizing the urgent need for scrutiny and reform in predictive justice systems.
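One common way to check for this kind of overestimation is to compare false positive rates across groups: among people who did not reoffend, how many were still labeled high risk? The sketch below uses invented records and hypothetical names, purely to show the calculation.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group share of non-reoffenders who were labeled high risk.

    Each record is (group, predicted_high_risk, reoffended).
    """
    false_positives = defaultdict(int)
    non_reoffenders = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            non_reoffenders[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    return {g: false_positives[g] / non_reoffenders[g] for g in non_reoffenders}


# Invented records for illustration only.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]
print(false_positive_rates(records))  # {'A': 0.5, 'B': 0.25}
```

In this toy data, people in group A who never reoffended were wrongly labeled high risk twice as often as those in group B. A model can look accurate overall and still produce a gap like this, which is why audits need to break errors down by group.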

What Steps Can Individuals Take to Advocate for Fairness in Policing?

To advocate for fairness in policing, you can elevate public awareness through community organizing that addresses systemic issues.

Join or initiate local forums focused on police accountability, pushing for independent oversight and bias reduction training.

Engage with local budgeting councils to advocate for resources that prioritize community well-being over punitive measures.

Share Your Own Garden

In contemplating the pervasive issue of algorithmic bias within predictive policing, we must ask ourselves: how can we reconcile the pursuit of safety with the ethical obligation to uphold equity? The intersection of technology and justice underscores the pressing need for vigilance against historical biases ingrained in data systems. By recognizing these patterns, communities can advocate for more transparent practices in law enforcement, driving forward a narrative that not only protects civil liberties but also demands accountability in our increasingly surveilled society.

