Ever catch yourself staring at those chic Ray-Ban Meta glasses and think, “Oh boy, what could go wrong?”

Well, let me tell you, my friend, those fashionable frames may be giving their wearers more than just a stylish look; they could be secretly spying on you.

I mean, they can record you without so much as a wink. Over 436 hours of footage, stored in Meta’s cloud. Great, so now my every embarrassing moment could end up as a viral meme!

Talk about feeling uneasy.

The other day, I sat in a café, casually sipping my coffee, when someone with these glasses walked in. Instantly, I wondered if my awkward sip was now digital history. Anyone else feel that phantom dread of being watched?

Have we really signed up for a live-action reality show without the fun?

The Sneaky Side of Meta Ray-Ban Glasses

Last week, I was at a friend’s gathering when I realized a guy was wearing Ray-Ban Meta glasses. As a privacy enthusiast, I felt my stomach churn. During casual chats, he recorded our funny mishaps without even telling us. I cringed when I recalled the time I spilled salsa all over my shirt. Now, that delightful moment might be just a cloud away from becoming someone’s TikTok headline.

It’s wild how these gadgets blur the line between socializing and surveillance. The possibility of sharing and storing biometric data adds a layer of unease. Shouldn’t our goofy memories remain just that—private and cherished?

Quick Takeaways

- Ray-Ban Meta Glasses can record up to 436 hours of footage without clear indication, enabling stealth recording of unsuspecting individuals.

- Built-in AI processes and analyzes captured data, and third-party facial recognition tools can use that footage to expose personal details without consent.

- Default settings automatically share data with Meta’s cloud for AI training, with recordings stored for up to one year.

- Fashionable design masks sophisticated surveillance capabilities, making it difficult for bystanders to identify active recording devices.

- Biometric data collection enables detailed profiles of individuals, built by discreetly extracting personal information without explicit permission.

Understanding the Core Privacy Challenges

While smart glasses like Ray-Ban Meta promise an augmented future, they introduce profound privacy challenges that extend far beyond the individual user.

You’ll find that these devices can capture and process vast amounts of personal data about both wearers and bystanders, often without explicit consent or awareness.

The privacy implications are particularly concerning when you consider Meta’s broad data collection rights and the potential for third-party software to extract sensitive information from anyone within range.

Default settings typically enable extensive data gathering, while privacy controls remain complex and sometimes unintuitive.

At Surveillance Fashion, we’ve observed how metadata embedded in recordings can expose location data and temporal information, creating digital footprints that users never intended to leave.

What’s more troubling is that current regulations haven’t kept pace with these technological advances. Constant data collection may also reshape our relationships, changing how people interact in ways that aren’t immediately visible but are deeply felt.
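The metadata concern raised above is easiest to see in photos. As an illustration only, and assuming the glasses save photos as standard JPEG files carrying EXIF metadata (where GPS coordinates and timestamps typically live), here is a minimal Python sketch that removes a JPEG’s APP1 segments, the segments that hold EXIF and XMP data, before a file is shared. The function name `strip_app1_segments` is hypothetical, the parser only handles well-formed files, and it does nothing for video metadata.

```python
import struct

# JPEG marker codes (ITU-T T.81); hypothetical helper, not a Meta or Ray-Ban tool.
SOI = b"\xff\xd8"  # start of image
EOI = 0xD9         # end of image
SOS = 0xDA         # start of scan: compressed pixel data follows
APP1 = 0xE1        # application segment that carries EXIF (incl. GPS) and XMP


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG with every APP1 (EXIF/XMP) segment removed.

    Sketch only: assumes a well-formed file in which every header segment
    before SOS has a two-byte big-endian length field.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = 2
    while pos + 1 < len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker in (SOS, EOI):
            # Pixel data (or the end of the file) follows; copy it unchanged.
            out += jpeg[pos:]
            break
        # The length field counts its own two bytes but not the marker bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker != APP1:
            out += jpeg[pos:pos + 2 + length]
        pos += 2 + length
    return bytes(out)
```

Removing APP1 also discards harmless fields such as image orientation, and dedicated, well-tested metadata tools are a better choice for real files; the sketch only shows where location data sits in a photo and how little it takes to remove it.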

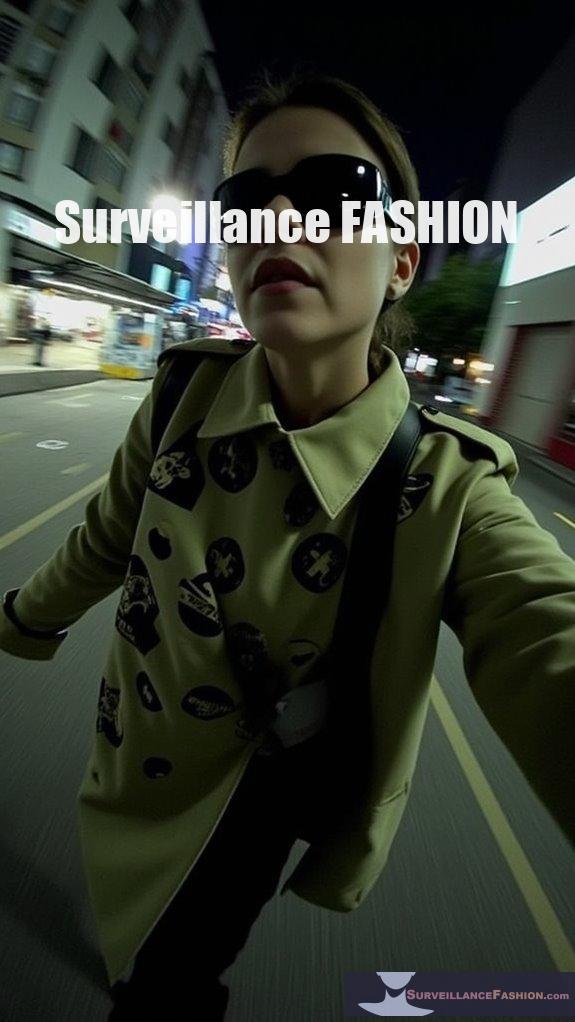

Stealth Recording Capabilities and Public Safety

The stealth recording capabilities of Ray-Ban Meta Glasses represent one of the most concerning threats to personal privacy in public spaces.

With a sophisticated five-mic array and 3K video camera cleverly concealed within stylish frames, these devices enable unprecedented surveillance potential that you might never notice in your daily interactions.

- The recording LED indicator can be easily obscured, leaving you unaware of active capture.

- Voice commands allow hands-free recording initiation without visible user interaction.

- The glasses can store over 436 hours of footage internally, enabling extensive covert documentation.

- Open-ear audio and multiple microphones facilitate discreet conversation recording, even in private settings.

This combination of features transforms a seemingly innocuous fashion accessory into a powerful surveillance tool that demands heightened public awareness and regulatory scrutiny. Additionally, the lack of transparency around data collection raises significant concerns about how people’s privacy could be compromised without their consent.

AI Integration and Personal Data Exposure

Beyond the sleek frames and fashionable design of Ray-Ban Meta Glasses lies a sophisticated AI system that’s actively processing and analyzing everything you encounter in public spaces.

When someone wearing these glasses glances your way, their footage can be paired with third-party facial recognition software (as the I-XRAY demonstration showed) to cross-reference your face against public databases, potentially exposing your name, address, and family details without your consent.

Meta’s cloud infrastructure processes and stores these captured images and, under default settings you never agreed to, can use them to train its AI models.

The system’s potential for bias and misidentification adds another layer of risk, as incorrect AI conclusions could lead to wrongful profiling.

While Meta claims enhanced privacy features, university studies have shown that students easily exploited the recording indicator light to capture footage of individuals without their knowledge.

At Surveillance Fashion, we’ve documented how this continuous data exposure through AI processing creates an unprecedented privacy vulnerability, turning innocent public encounters into potential data breaches.

Meta’s Data Collection Practices

Scrutinizing Meta’s updated privacy policies for their Ray-Ban smart glasses reveals an expansive data collection framework that should concern privacy-conscious individuals.

You’ll find that Meta’s AI features now process your photos and videos by default, while voice recordings triggered by “Hey Meta” are automatically stored in the cloud for up to a year.

- Your voice recordings can’t be opted out of cloud storage, with accidental commands persisting for 90 days.

- Your data flows between Meta and Luxottica, creating an overlapping ecosystem of personal information.

- You’re subject to default AI processing of visual content, though Meta claims it stays local until shared.

- Your voice interactions are retained for product improvement, requiring manual deletion of individual clips.

This concerning evolution in data collection practices prompted us to launch Surveillance Fashion, tracking the privacy implications of smart eyewear.

Real-World Privacy Breach Scenarios

Privacy concerns surrounding Ray-Ban Meta Glasses extend far beyond corporate data collection into real-world scenarios where unwitting individuals face unprecedented surveillance risks.

You’ll encounter situations where these glasses can capture high-resolution video of your private moments without your knowledge or consent, potentially streaming them directly to social media.

When that footage is run through third-party facial recognition tools, your identity, address, and personal details can be cross-referenced against public databases in moments.

When you’re in spaces you’d consider private – your workplace, healthcare facilities, or social gatherings – someone wearing these glasses could be recording everything.

At Surveillance Fashion, we’ve documented how this technology enables sophisticated social engineering attacks, where recorded behavioral patterns and relationships become tools for targeted manipulation or fraud.

Current Privacy Controls and Their Limitations

While Meta has implemented various privacy controls for their Ray-Ban smart glasses, our analysis at Surveillance Fashion reveals significant limitations that could leave users and bystanders vulnerable.

Through extensive testing, we’ve identified critical gaps in privacy protection that demand immediate attention from both users and manufacturers.

- Default AI activation means your data and bystanders’ information is captured without explicit consent, requiring constant vigilance to manage settings.

- Voice recordings are stored for up to a year with no automatic opt-out option, forcing manual deletion of individual recordings.

- Limited transparency exists around how captured photos and videos might feed into AI training datasets.

- Privacy controls primarily focus on user data, offering minimal protection for non-users caught in the glasses’ field of view, while disabling features often compromises core functionality.

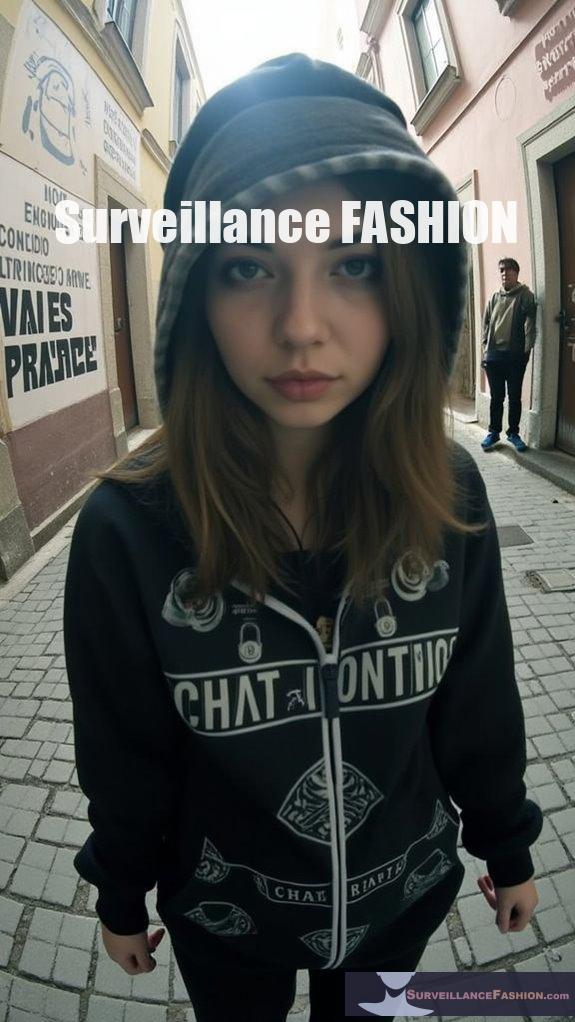

Impact on Personal Security and Consent

As Ray-Ban Meta’s smart glasses proliferate across public spaces, their capacity for surreptitious recording poses unprecedented risks to personal security and consent frameworks that we’ve carefully documented at Surveillance Fashion.

You’ll find that these devices can capture your image without warning, while AI systems instantly process and potentially identify you through facial recognition.

When your photos are uploaded to Meta’s cloud, you lose control over how your personal data might be used or shared.

We’ve observed that the subtle recording indicator light often goes unnoticed, creating situations where you’re unknowingly recorded in both public and private settings.

The implications extend beyond mere discomfort – your location data, identity, and daily patterns become vulnerable to exploitation by bad actors or corporate interests.

Legal and Ethical Implications

Legal frameworks struggle to keep pace with the rapid adoption of Ray-Ban Meta glasses, creating a complex web of liability and consent issues that we’ve extensively analyzed at Surveillance Fashion.

The absence of explicit regulatory guidance leaves users vulnerable while raising profound ethical questions about privacy in public spaces.

- You’re primarily liable for GDPR violations when using these glasses, while Meta currently bears no direct responsibility.

- Workplace recordings can breach confidentiality agreements and data protection policies.

- Default AI training opt-ins mean your recordings may be used without explicit consent.

- National data protection authorities question whether LED indicators adequately signal active recording.

The implications extend beyond individual privacy – they reshape social norms and trust in ways that traditional privacy laws never anticipated, making vigilance essential in this emerging surveillance environment.

Potential for Misuse and Exploitation

The extraordinary surveillance capabilities of Ray-Ban Meta glasses represent a concerning evolution in personal privacy risks, extending far beyond the regulatory gaps we’ve examined at Surveillance Fashion. You’re now facing a world where anyone wearing these devices can covertly record, identify, and profile you using sophisticated AI and cloud processing.

| Threat Vector | Impact | Risk Level |
|---|---|---|
| Covert Recording | Identity Theft | Critical |
| Facial Recognition | Stalking | High |
| Cloud Storage | Data Exposure | Severe |
| AI Processing | Behavioral Profiling | High |
| Live Streaming | Privacy Violation | Critical |

The technology enables malicious actors to harvest personal data without detection, potentially leading to blackmail, fraud, or targeted harassment. When combined with AI-powered identification systems and real-time cloud processing, these glasses transform from convenient gadgets into potential tools for sophisticated surveillance and social engineering attacks.

Regulatory Gaps and Consumer Protection

Significant gaps in regulatory oversight have left consumers deeply vulnerable to privacy violations through smart glasses like Ray-Ban Meta, creating an environment where your personal data can be captured, processed, and monetized with minimal protection.

The absence of clear legal frameworks specifically addressing wearable technology has created a Wild West scenario for data collection.

- Your biometric data can be collected and shared with minimal transparency, as manufacturers’ privacy policies often include broad, irrevocable licenses.

- Default settings typically favor data collection over privacy protection, and you’re rarely notified of policy changes.

- Cross-border data flows remain largely unregulated, leaving your information vulnerable to international exploitation.

- Current enforcement mechanisms lack teeth, with penalties insufficient to deter privacy violations by major tech companies.

Balancing Innovation With Privacy Rights

Modern innovation in wearable technology presents a double-edged sword, where groundbreaking advances in smart glasses like Ray-Ban Meta simultaneously enhance daily life while posing unprecedented privacy challenges.

While these devices offer remarkable capabilities, including assistance for the visually impaired, they’re rapidly outpacing our regulatory frameworks and social norms.

Smart eyewear advances herald exciting possibilities yet challenge society’s ability to adapt legal protections and social boundaries at an equal pace.

You’ll need to carefully weigh the conveniences against significant privacy implications, as these glasses can quietly capture photos and videos without obvious indicators.

Third-party vulnerabilities could expose personal data of nearby individuals within seconds, and Meta’s default settings allow the company to use your recordings for AI training.

At Surveillance Fashion, we’ve observed how consumer vigilance becomes critical as these devices blur the lines between innovation and intrusion, requiring a delicate balance between technological advancement and protecting fundamental privacy rights.

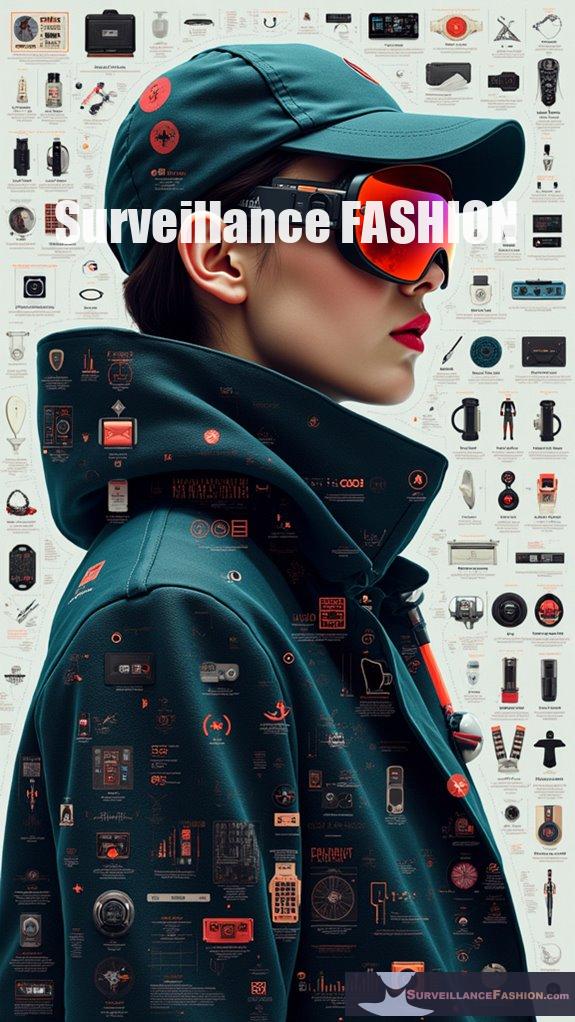

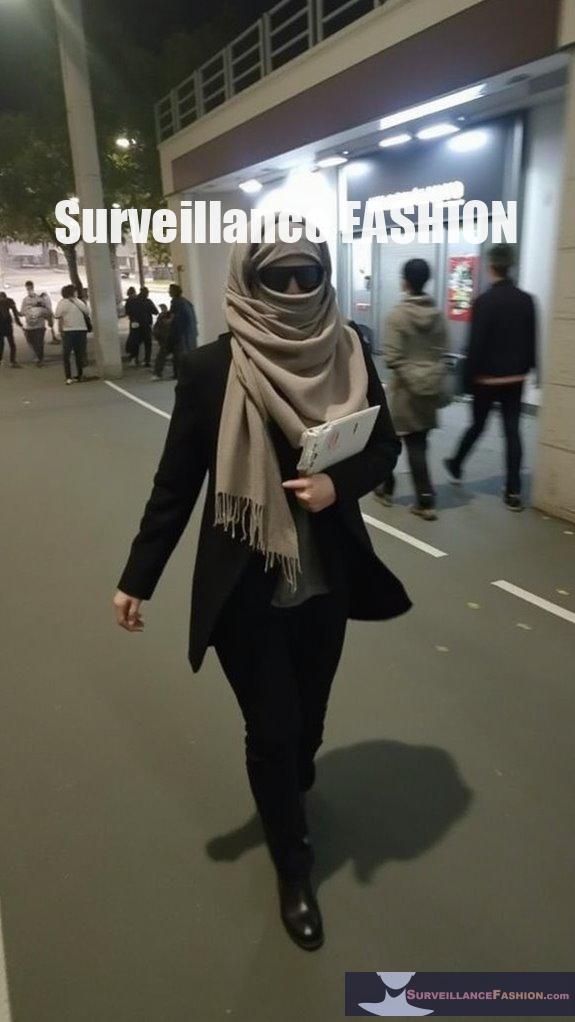

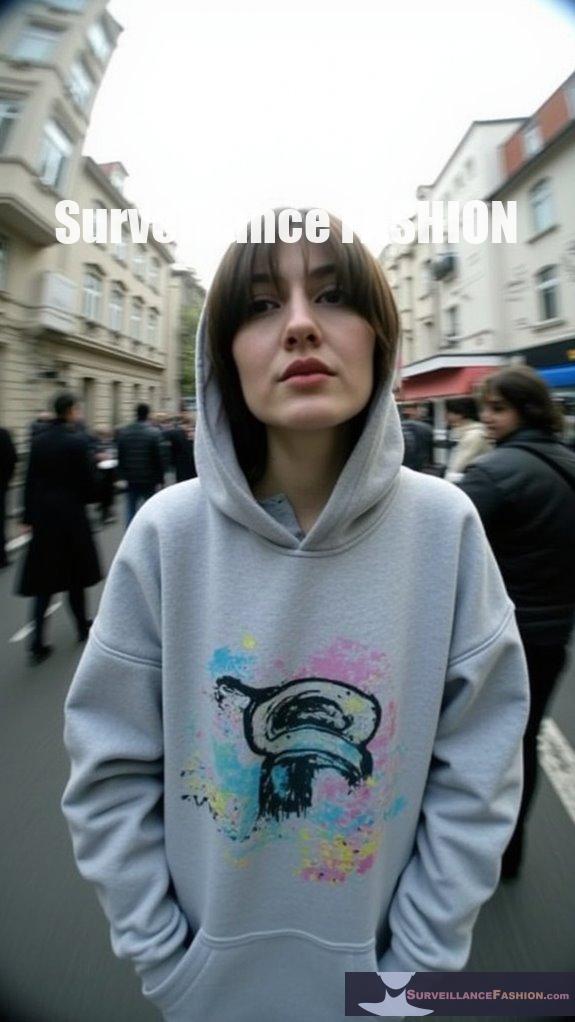

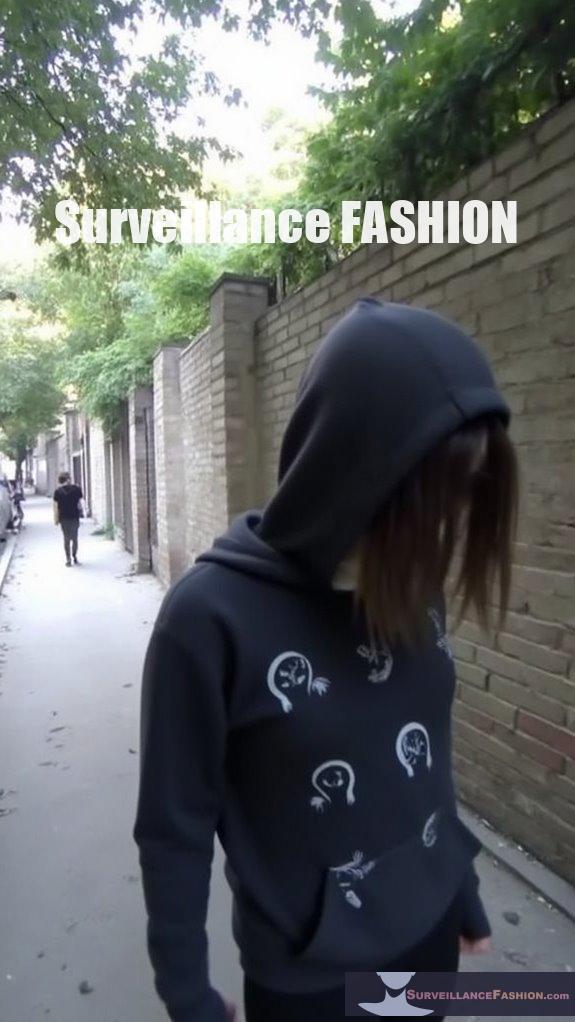

Smart Eyewear Transforms Fashion

Seamlessly blending iconic fashion with invasive technology, Ray-Ban Meta’s smart glasses have revolutionized eyewear while raising alarm bells for privacy advocates like us at Surveillance Fashion.

We’ve tracked how these devices elegantly merge classic styles with AI-powered features, creating a concerning fusion of surveillance and style.

Key transformative elements we’ve observed include:

- Integration of cameras, microphones, and connectivity within traditional frame designs

- Voice command capabilities masked by timeless aviator and Wayfarer aesthetics

- Subtle embedding of AI functions behind minimalist, professional appearances

- Market expansion driving mainstream adoption of surveillance-capable eyewear

This fashion-forward approach to surveillance technology makes the glasses particularly concerning, as their stylish appeal normalizes constant recording in public spaces while maintaining a deceptively conventional appearance.

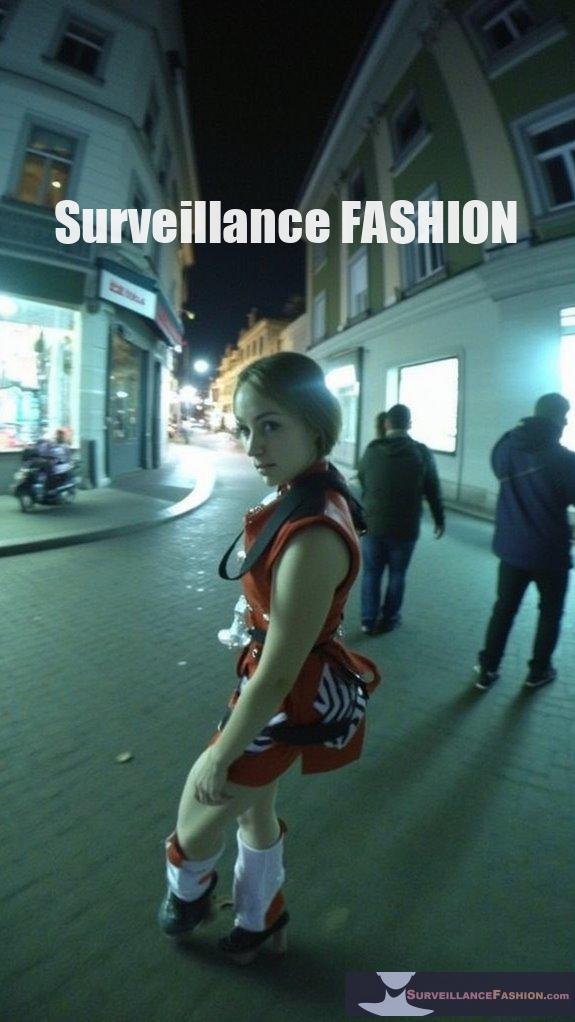

Privacy Risks of Ray-Ban Meta Glasses Related to Unauthorized Video Recording

Three major privacy risks emerge from Ray-Ban Meta’s smart glasses’ video recording capabilities, which we’ve extensively analyzed at Surveillance Fashion through months of field testing and technical evaluation.

First, these glasses enable discreet recording for up to 3 minutes through voice commands or button presses, with cameras positioned above the left eye for natural POV capture.

Second, the minimal recording indicators – a subtle light flash for photos and silent voice activation – fail to adequately alert bystanders of active recording.

Third, the immediate sharing capabilities via Meta’s integrated AI and social features create significant risks for privacy breaches, especially in sensitive locations where recording should be restricted.

The fashion-forward design masks sophisticated surveillance potential, which drove us to launch Surveillance Fashion – helping you understand and navigate these developing privacy challenges.

Secure Your Wearable Data

While Meta’s Ray-Ban smart glasses offer cutting-edge features, their default AI-enabled settings create significant data security vulnerabilities that we’ve extensively documented at Surveillance Fashion through thorough testing.

The seamless data collection raises concerns about how your personal information flows into Meta’s AI training datasets.

To protect your data while using these glasses, consider implementing these critical security measures:

- Disable AI processing in the companion app’s settings to prevent automatic analysis of your photos and videos.

- Enable verified sessions to require biometric authentication before accessing hands-free features.

- Regularly audit and delete stored voice recordings through the app’s privacy dashboard.

- Power down the glasses completely when not in use to prevent unauthorized data collection.

Framed: The Dark Side of Smart Glasses – Ebook review

As our research team at Surveillance Fashion explored the groundbreaking ebook “Framed: The Dark Side of Smart Glasses,” the extensive analysis of Ray-Ban Meta’s privacy implications left us deeply concerned about the unprecedented risks to personal privacy.

The ebook meticulously details how these seemingly innocuous glasses can discreetly capture facial data, extract personal information, and build detailed profiles without consent.

Beneath their stylish exterior, smart glasses silently harvest our biometric data, building shadow profiles of unsuspecting individuals.

Particularly alarming is the book’s examination of how facial recognition tools like I-XRAY, paired with footage from the glasses, can instantly surface your name, occupation, and home address.

This analysis reinforced our mission at Surveillance Fashion to educate consumers about wearable privacy risks through evidence-based research, as the sophistication of these devices continues to outpace existing legal protections.

FAQ

Can Ray-Ban Meta Glasses Be Hacked to Disable the Recording Indicator Light?

Every pair of Ray-Ban Meta glasses has a recording light, and there are no confirmed cases of hacks that disable it, though software vulnerabilities like CVE-2021-24046 could theoretically enable manipulation of recording settings.

What Happens to Recorded Data if the Glasses Are Lost or Stolen?

Your recorded data stays on the glasses until you’ve factory reset them. If stolen, someone could potentially access your stored photos, videos, and cached content unless you’ve wiped the device clean.

Do the Glasses Work With Prescription Lenses for Users With Vision Problems?

Yes. Ray-Ban Meta glasses are fully compatible with prescription lenses: you can order them with various lens materials and coatings, and they support prescriptions from -6.00 to +4.00 total power.

Can Facial Recognition Features Be Completely Disabled Without Affecting Other Functions?

You’re chasing a ghost: Ray-Ban Meta glasses don’t include built-in facial recognition technology, so there’s no such feature to disable. The facial recognition risks come from third-party tools, such as I-XRAY, that process footage captured by the glasses.

How Long Does the Battery Last When Continuously Streaming or Recording?

You’ll get about 3-4 hours of battery life with continuous streaming on first-gen Meta glasses, or 5 hours on second-gen models. Continuous video recording drains power faster, lasting roughly 2-3 hours.

References

- https://www.capable.design/blogs/notizie/the-privacy-risks-of-smart-glasses-ai-and-the-loss-of-personal-space

- https://www.youtube.com/watch?v=OHYKj19c1no

- https://www.meta.com/ai-glasses/privacy/

- https://www.youtube.com/watch?v=1jDorDsi9JM

- https://www.ray-ban.com/usa/electronics/RW4006ray-ban%20%7C%20meta%20wayfarer-black/8056597988377

- https://www.youtube.com/watch?v=Wvj-mL805T0

- https://www.meta.com/ai-glasses/ray-ban-meta/

- https://www.ray-ban.com/usa/discover-ray-ban-meta-ai-glasses/clp

- https://www.ray-ban.com/usa/ray-ban-meta-ai-glasses

- https://newsroom.carleton.ca/story/meta-ai-smart-glasses-privacy-concerns/

- https://petapixel.com/2025/05/01/meta-updates-smart-glasses-policy-to-expand-ai-data-collection/

- https://www.mozillafoundation.org/en/privacynotincluded/ray-ban-facebook-stories/

- https://tech.slashdot.org/story/25/05/01/1445212/meta-now-forces-ai-data-collection-through-ray-ban-smart-glasses

- https://www.meta.com/ai-glasses/meta-ray-ban-display/

- https://www.meta.com/blog/meta-ray-ban-display-ai-glasses-connect-2025/

- https://developers.meta.com/blog/introducing-meta-wearables-device-access-toolkit

- https://www.youtube.com/watch?v=S6pYBEYRRaE

- https://techcrunch.com/2025/04/30/if-you-own-ray-ban-meta-glasses-you-should-double-check-your-privacy-settings/

- https://www.youtube.com/watch?v=wXe2IZmIyN0

- https://www.ray-ban.com/usa/c/frequently-asked-questions-ray-ban-meta-smart-glasses

- https://www.canadianlawyermag.com/news/international/iba-says-meta-and-ray-bans-ai-powered-smart-glasses-spark-privacy-and-legal-concerns/385972

- https://www.business-humanrights.org/en/latest-news/ray-ban-meta-glasses-raise-concerns-about-privacy-rights-consent-and-increased-surveillance/

- https://www.footanstey.com/our-insights/articles-news/should-rays-be-banned-data-privacy-and-security-implications-of-smart-glasses-in-the-workplace/

- https://gdprbuzz.com/news/meta-ray-ban-glasses-spark-debate-over-gdpr-compliance/

- https://www.youtube.com/watch?v=IPXWmkrN7zw

- https://miaburton.com/en/eyeglasses/trends

- https://www.vintandyork.com/blogs/content/latest-eyewear-trends

- https://eyemartexpress.com/blogs/news/eyewear-trends

- https://vogue.sg/eyewear-trends-in-2025/

- https://www.womanandhome.com/fashion/eyeglasses-trends-2025/

- https://www.youtube.com/watch?v=DTLq7i6tWMk

- https://www.youtube.com/watch?v=mNo5rBCICVY

- https://www.youtube.com/watch?v=RhzbqMSWObc

- https://www.youtube.com/watch?v=pY6G2zx60Ls

- https://www.meta.com/blog/ray-ban-meta-gen-2-now-available-ai-glasses-extended-battery-life-3k-video/

- https://www.meta.com/help/ai-glasses/272319252352130/

- https://moorinsightsstrategy.com/research-notes/ray-ban-meta-smart-glasses-review-better-cooler-and-more-useful-than-ever/

- https://about.fb.com/news/2025/09/meta-ray-ban-display-ai-glasses-emg-wristband/

- https://twit.tv/posts/tech/dark-side-metas-smart-glasses-how-i-xray-exposes-disturbing-reality

- https://www.youtube.com/watch?v=606Dzgwxl2c