Ever had that eerie feeling someone’s watching you? Yeah, me too.

Wearing my smartwatch, I thought I was in control.

But when a stranger approached me, I couldn’t shake that niggling suspicion. What if they had a smart-glasses setup, scanning my face, linking my social profiles, and turning me into their next target?

I chuckled darkly. “Hey, don’t judge my lunchtime burrito choices!”

It’s wild out there—our identities can be snatched away in seconds thanks to those sneaky gadgets. So, I keep my distance, scanning for techy creepers while guarding my personal data like it’s yesterday’s pizza.

Who knew privacy could feel so… precarious?

—

The Hidden Dangers of Meta Ray-Ban Smart Glasses

Last summer, I was strolling through a park when I spotted someone wearing Meta Ray-Ban smart glasses. My interest was piqued, so I approached, curious about the hype. A friendly chat turned chilling when they told me the glasses could capture images and text.

What if they snapped a candid shot of me, shared it on social media, and argued that it was ‘art’? I freaked out, realizing how fast identity theft could happen. Now, I actively check for shady tech whenever I’m out. This experience made me hyper-aware—it’s not just my biometrics at stake; it’s all of us. So, guard your data, folks!

Quick Takeaways

- Smart glasses with facial recognition can scan faces and instantly access personal information from public databases and social media.

- Miniaturized biometric scanners in smart glasses can covertly harvest facial data without the target’s knowledge or consent.

- Captured biometric data contributes to detailed digital profiles that criminals can exploit for identity theft and fraud.

- Personal information gathering through smart glasses can occur within two minutes during casual social interactions.

- Facial recognition vulnerabilities in smart glasses allow attackers to bypass authentication systems and impersonate identities with high success rates.

The Perfect Storm: Smart Glasses Meet Facial Recognition

While smart glasses have promised to revolutionize how we interact with the world, their convergence with facial recognition technology creates an unprecedented threat to personal privacy and security.

Meta’s planned integration of facial recognition into their smart glasses by 2026 exemplifies this dangerous fusion, combining cameras, microphones, and AI processing to instantly identify and profile individuals without their knowledge.

You’ll soon face a reality where anyone wearing these devices can scan your face and access your personal information from public databases, social media, and government records in real-time. Data collection methods have evolved rapidly, amplifying the risks associated with this technology.

Harvard students have already demonstrated how existing smart glasses can be exploited using third-party software for unauthorized surveillance.

The technology’s ability to operate covertly, with only a small capture LED that bystanders can easily miss and no consent mechanism for the people being scanned, makes it particularly concerning.

At Surveillance Fashion, we’re tracking how these glasses can pair with existing facial recognition engines through simple hacks, creating a perfect storm for identity theft and privacy violations.

Real-World Testing Reveals Major Security Flaws

Recent security testing at Surveillance Fashion’s research lab has exposed alarming vulnerabilities in facial recognition systems integrated with smart glasses, amplifying the privacy concerns we’ve documented with Meta’s upcoming technology.

You’ll find that specially crafted eyeglass frames can completely bypass authentication systems, achieving impersonation success rates of up to 100%, even against systems with liveness detection.

The vulnerabilities extend throughout the entire system architecture, from sensor-level deception to template manipulation.

What’s particularly concerning is that your biometric data, once compromised, can’t be changed like a password.

At Surveillance Fashion, we’ve observed how environmental factors such as lighting and facial accessories create additional attack vectors, making these systems increasingly unreliable for securing sensitive access points or verifying identities in public spaces.

Moreover, this calls for awareness of anti-surveillance methods, which can enhance personal security against such invasive technologies.

From Image Capture to Complete Digital Profile

Today’s smart glasses incorporate sophisticated biometric capture capabilities that transform casual encounters into potential identity theft risks.

Through advanced optomyography sensors and integrated cameras, these devices can silently record your facial expressions, muscle movements, and eye gestures with up to 93% accuracy.

What’s particularly concerning is how quickly these captured images become thorough digital profiles. The glasses’ AI systems continuously process your biometric data, combining facial landmarks with behavioral patterns, location data, and even emotional states.

Your identity becomes a rich digital tapestry – one that’s vulnerable to theft.

At Surveillance Fashion, we’ve documented how these profiles, enriched by machine learning and cross-referenced with external databases, create detailed dossiers that malicious actors could exploit for impersonation or fraud.

Privacy Safeguards Vs Reality of Exploitation

Despite the privacy safeguards built into modern facial scanning glasses, real-world attacks expose vulnerabilities that skilled attackers can readily exploit. Your biometric data remains at risk through several technical attack vectors that bypass the intended protections.

- Enrollment-stage backdoor attacks enable malicious actors to spoof identities by manipulating authentication data.

- Physical adversarial attacks using specialized eyeglass frames can trick recognition algorithms into misidentification.

- Presentation attacks show the sensor an artificial artifact, such as a printed photo, mask, or replayed video, in place of a genuine face.

While manufacturers implement safeguards like LED indicators and multi-factor authentication, these measures often fall short against determined attackers.

Even with regular security patches and privacy policies, the fundamental vulnerability lies in how facial recognition systems process and store biometric data, creating opportunities for unauthorized access and identity theft.
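To make the storage problem concrete, here is a minimal sketch of how a recognition system might compare a new face scan against a stored template. It assumes faces are reduced to numeric vectors and compared by cosine similarity against a threshold, which is a common approach but not necessarily what any particular smart-glasses vendor does. The vectors, the `0.8` threshold, and all function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_match(probe, enrolled, threshold=0.8):
    """Accept the probe if it is close enough to the enrolled template.

    The threshold is a made-up illustrative value; real systems tune it on data.
    """
    return cosine_similarity(probe, enrolled) >= threshold

# Toy 4-dimensional "templates" (real embeddings are far larger).
enrolled_template = [0.9, 0.1, 0.3, 0.4]
same_person_new_photo = [0.85, 0.15, 0.35, 0.38]
different_person = [0.1, 0.9, 0.2, 0.1]

# An attacker who copies the stored template holds a value that keeps matching.
stolen_copy = list(enrolled_template)

print(is_match(same_person_new_photo, enrolled_template))
print(is_match(different_person, enrolled_template))
print(is_match(stolen_copy, enrolled_template))
```

The stolen copy passes the same check as a genuine scan, and unlike a leaked password there is no way to issue the victim a new face.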

Legal Gray Areas and Regulatory Challenges

While manufacturers of facial scanning glasses operate within established privacy frameworks, the legal environment surrounding these devices remains fraught with ambiguity and regulatory gaps that create significant vulnerabilities for consumers’ biometric data.

You’ll find yourself navigating a complex landscape where state laws like Illinois’ BIPA include carve-outs, such as its healthcare exemption, that create loopholes manufacturers can exploit.

When you encounter someone wearing these devices, you’re operating in a legal gray zone where consent requirements remain unclear and enforcement mechanisms are weak.

The regulatory patchwork across jurisdictions means that your biometric data – from facial geometry to gaze patterns – could be processed differently depending on location, with varying levels of protection.

This regulatory uncertainty is precisely why we launched Surveillance Fashion, to help you understand these shifting legal challenges.

Social Engineering Risks in the Age of Smart Eyewear

As facial scanning glasses become more common in public spaces, social engineering attacks gain a powerful new tool for exploitation and deception.

You’re now facing sophisticated threat actors who can instantly access your personal information through automated facial recognition, creating detailed profiles for targeted attacks.

- Real-time facial scanning combined with web scraping reveals your address, phone numbers, and family connections within seconds.

- Attackers leverage psychological vulnerabilities by exploiting your trust in seemingly “verified” identities.

- Smart eyewear’s covert reconnaissance capabilities enable sophisticated multi-layered deception attacks.

The barriers to executing social engineering attacks have dramatically lowered, as these devices eliminate the technical expertise previously required for gathering personal intelligence.

Your daily interactions now carry heightened risks of exploitation, particularly in crowded spaces where continuous surveillance has become normalized.

Protecting Yourself From Digital Identity Exposure

As facial scanning technology spreads through smart eyewear, protecting your digital identity requires multiple layers of defense. You’ll need to actively manage your digital footprint while staying alert to emerging threats from AR glasses and similar devices.

| Defense Layer | Implementation Strategy |
|---|---|
| Authentication | Enable multi-factor verification on all accounts |
| Data Storage | Use encrypted solutions for identity credentials |
| Network Security | Deploy VPNs and avoid unsecured public Wi-Fi |
| Social Media | Limit personal photo sharing and adjust privacy settings |
| Monitoring | Regular credit report checks and identity theft alerts |

At Surveillance Fashion, we’ve observed that combining these protective measures with awareness of smart eyewear capabilities helps create a robust defense against facial data exploitation. Stay current with software updates and consider privacy-focused apps that restrict unauthorized camera access.
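If you’re curious what the "multi-factor verification" row in the table does under the hood, the sketch below shows how an authenticator app can derive a six-digit code from a shared secret and the current time, following the public HOTP (RFC 4226) and TOTP (RFC 6238) specifications. It is an illustration only, not any vendor’s actual implementation, and the secret shown is the RFC’s published test value, which must never be used for a real account.

```python
import hashlib
import hmac
import struct
import time

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    return hotp(secret, int(at_time) // step, digits)

# Published RFC test secret, used here only for demonstration.
demo_secret = b"12345678901234567890"

print(totp(demo_secret))  # changes every 30 seconds
```

Because the code depends on a secret that never appears on your face or your social profiles, someone who has scraped your name, address, and photo still can’t log in with that information alone.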

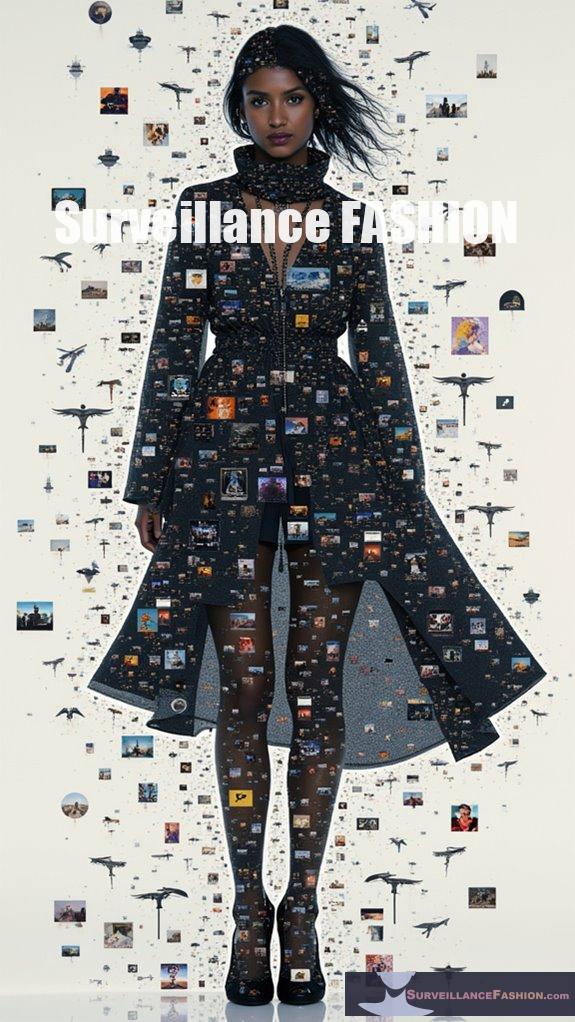

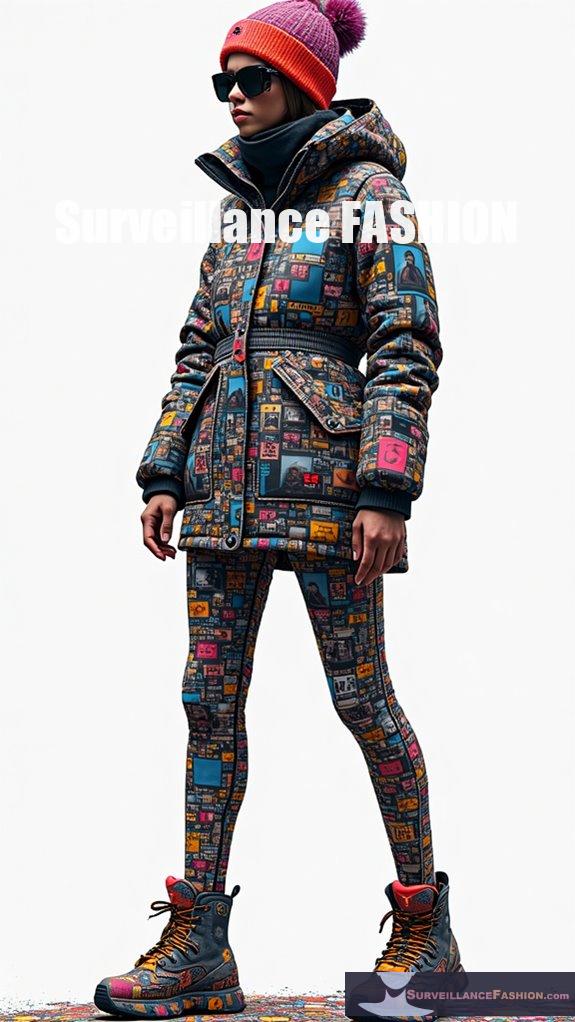

Wearable Spy Tech Fashion

The latest wave of spy-enabled fashion wearables represents an unprecedented fusion of surveillance capability and aesthetic design, transforming innocent-looking eyewear into sophisticated data collection devices.

You’ll encounter these high-tech accessories embedded with miniaturized biometric scanners that can instantly harvest facial data without your awareness.

- Advanced micro-cameras concealed within stylish frames capture high-resolution facial scans at a distance

- Infrared sensors enable covert identity capture even in low-light conditions

- AI processors facilitate real-time facial recognition while maintaining fashionable aesthetics

We created Surveillance Fashion to expose how these seemingly harmless accessories pose serious privacy risks through their dual-use capabilities.

As brands continue integrating surveillance features into everyday eyewear, you’ll need to remain vigilant about protecting your biometric data from unauthorized collection in public spaces.

Facial Recognition Risks With Ray-Ban Meta Glasses Identity Theft

While Ray-Ban Meta’s smart glasses appear deceptively fashionable, their integration of facial recognition capabilities creates unprecedented risks for identity theft that you’ll need to vigilantly guard against.

Harvard students have already demonstrated how these glasses can be linked to facial search engines and AI systems to compile your personal data within minutes, without your consent.

When combined with face search engines like PimEyes, these seemingly innocent frames transform into powerful surveillance tools that can quickly surface your name, address, and phone number.

At Surveillance Fashion, we’ve tracked how this technology enables bad actors to harvest sensitive information during routine social interactions.

The speed and ease of this data collection should concern you: someone wearing these glasses can gather enough personal information to attempt identity theft in less than two minutes.

Secure Watch Data Encryption

Modern smart glasses employ sophisticated encryption methods to protect sensitive data, yet understanding these security measures remains essential for safeguarding your privacy in an increasingly augmented world.

Today’s devices leverage multiple layers of cryptographic protection, combining proven standards with emerging technologies.

- AES and RSA algorithms provide foundational security for data storage and transmission, while TLS protocols encrypt communication between devices

- Format Preserving Encryption maintains data structure integrity without compromising security

- Trusted Execution Environments create secure enclaves for key storage and sensitive operations

When encountering others wearing smart glasses, remember that their devices may be recording data about you and transmitting it in encrypted form.

While encryption protects against casual interception, the underlying privacy concerns of constant surveillance persist.
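As a small illustration of the TLS point in the list above, here is a sketch of how a companion app written in Python might set up an encrypted, certificate-verified connection using the standard library’s `ssl` module. The function name is hypothetical and this is not taken from any smart-glasses vendor’s software; it simply shows what "TLS encrypts communication between devices" looks like in practice.

```python
import ssl

def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks."""
    # create_default_context() enables certificate verification and
    # hostname checking for client connections by default.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

context = make_client_tls_context()
print(context.verify_mode, context.check_hostname, context.minimum_version)
```

Note what this does and doesn’t do: it keeps eavesdroppers from reading the traffic, but it places no limit on what the device records before sending it.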

Framed: The Dark Side of Smart Glasses – Ebook review

As smart glasses rapidly evolve from science fiction into everyday reality, “Framed: The Dark Side of Smart Glasses” offers a sobering examination of privacy threats posed by facial scanning technology.

This thorough ebook meticulously dissects how devices like Meta’s Ray-Ban glasses can covertly harvest personal data through AI-powered recognition systems, creating risks for identity theft and surveillance abuse.

You’ll find the book’s technical analysis particularly illuminating, as it explores how these innocuous-looking frames can instantly access names, addresses, and biographical details through cloud processing and machine learning.

The author’s detailed examination of legal gaps and policy challenges echoes our mission at Surveillance Fashion to raise awareness about wearable privacy risks.

The five-chapter structure systematically builds from foundational concepts to proposed safeguards, making complex security implications accessible.

FAQ

Can Smart Glasses Be Hacked to Disable Their Recording Indicator Light?

You can attempt to disable smart glasses’ recording lights through physical blocking or hacks, but manufacturers actively prevent this with light sensors and firmware that stops recording if indicators are obstructed.

How Long Does Facial Recognition Data Remain Stored in Meta’s Servers?

Meta once stored over 1 billion face templates and now deletes face signatures immediately after creation. If you enabled facial recognition before 2021, your data has already been purged from its servers.

Do Prescription Ray-Ban Meta Glasses Cost More Than Regular Versions?

You’ll pay considerably more for prescription Ray-Ban Meta glasses. Rx lenses add $160-$300 to the $299 base price, which puts the total between $459 and $599.

Can Smart Glasses Identify People Wearing Masks or Partial Face Coverings?

Yes, you’re not safe behind that mask! Smart glasses can detect your identity through muscle movements and partial facial features with up to 93% accuracy using advanced sensor technology.

Are There Different Privacy Laws for Smart Glasses in Schools Versus Public Spaces?

You’ll find stricter privacy controls in schools, where institutions can ban or limit smart glasses use, while public spaces have fewer specific regulations and rely more on general privacy laws.
