Automated detection of child sexual abuse material (CSAM) makes me shudder.

Picture this: software scanning your photos under the pretense of protecting us, in a world that’s supposed to be encrypted. Irony at its finest, right? I once found myself staring at a privacy policy, wondering whether I was the paranoid one.

High false-positive rates? Yikes! I can’t be the only one who fears my innocent cat pics will trigger a red flag.

And let’s be honest, what’s worse than being surveilled in the name of safety? It’s confusing, it’s scary, and it’s a slippery slope.

Is chasing security worth our freedom?

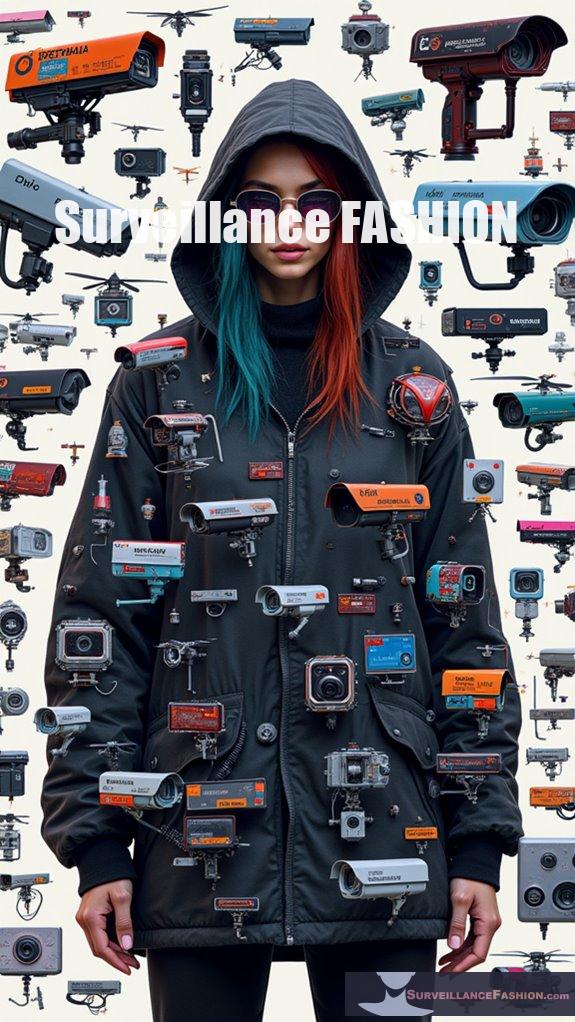

Anti-Surveillance Fashion: A Personal Journey into Privacy

I remember my first experience with anti-surveillance fashion. It was a quirky black hoodie I found at a flea market. Little did I know that its design was intentional: it was made to confuse facial recognition technology.

Every time I put it on, I felt a rush of rebellion against prying eyes. I wore it to a public gathering, and you wouldn’t believe the sideways glances I received.

Discomfort mingled with empowerment. I was suddenly part of a movement that champions privacy. Terms like “data protection” and “anonymity” took on new meaning. I’ve since embraced clothing that communicates my desire for freedom, merging style with a statement. Who knew fashion could be a silent protest?

Quick Takeaways

- Automated CSAM detection undermines user privacy by scanning content before it is encrypted, exposing it to unauthorized surveillance and data misuse.

- High rates of false positives lead to wrongful scrutiny and distress for innocent users, raising serious concerns about detection accuracy and privacy intrusions.

- Surveillance risks associated with automated systems may lead to constitutional rights violations and erode public trust in secure messaging platforms.

- The potential repurposing of detection tools for intrusive surveillance creates a significant risk of compromising personal freedoms without explicit user consent.

- The ongoing dialogue about balancing safety and privacy is crucial to ensure oversight in automated processes and protect civil liberties in digital interactions.

Client-Side Scanning in End-to-End Encryption

Client-side scanning (CSS) in the framework of end-to-end encryption (E2EE) has emerged as a contentious solution to combat the dissemination of Child Sexual Abuse Material (CSAM), particularly as regulatory pressures mount globally.

In practice, CSS inspects content such as images and videos on your device before it is encrypted. The software computes a digital fingerprint (a hash) of each item and checks it against a database of hashes before the message is sent. This creates new risks to privacy and data integrity: the scanner sees your content in plaintext, so a compromised, buggy, or repurposed scanner, or a tampered hash database, can expose data that encryption was supposed to protect. Moreover, CSS could pave the way for broader surveillance practices that threaten freedom of expression online.
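
To make the ordering concrete, here is a minimal sketch of a check-before-encrypt flow, assuming exact SHA-256 matching against a local hash set. Deployed or proposed systems (for example Microsoft's PhotoDNA or Apple's proposed NeuralHash design) use perceptual hashes and more elaborate protocols, and the names `KNOWN_HASHES`, `scan_then_send`, and `toy_encrypt` are hypothetical. The point the sketch illustrates is where the check sits: the match decision is made on plaintext, before any encryption happens.

```python
import hashlib
import os

# Hypothetical fingerprint list pushed to the device. Here it holds the
# SHA-256 digest of a harmless placeholder payload; in a real deployment
# the user has no way to inspect what the list actually contains.
KNOWN_HASHES = {hashlib.sha256(b"placeholder-listed-item").hexdigest()}


def fingerprint(content: bytes) -> str:
    """Exact cryptographic fingerprint of the plaintext content."""
    return hashlib.sha256(content).hexdigest()


def toy_encrypt(content: bytes, key: bytes) -> bytes:
    """Stand-in for real E2EE: XOR with a repeating key. NOT secure."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(content))


def scan_then_send(content: bytes, key: bytes) -> tuple[bytes, bool]:
    """Client-side scanning: check the plaintext first, encrypt second.

    Returns the ciphertext and whether the content matched the list.
    The match decision is made on unencrypted data, which is why
    critics say the scanner sidesteps end-to-end encryption.
    """
    flagged = fingerprint(content) in KNOWN_HASHES
    ciphertext = toy_encrypt(content, key)
    return ciphertext, flagged


if __name__ == "__main__":
    key = os.urandom(16)
    _, hit = scan_then_send(b"placeholder-listed-item", key)
    _, miss = scan_then_send(b"photo of my cat", key)
    print(hit, miss)  # True False
```

An exact hash like SHA-256 changes completely if a single byte changes, which is why real systems prefer perceptual hashes; that choice makes evasion harder but raises the chance of false matches.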

Contrary to the assurances of E2EE, CSS introduces a backdoor-like access point, undermining the promise of full confidentiality. This fragility has human consequences too, such as unwarranted reports of innocent content, which erode trust in the secure messaging platforms that many people, including creators like us at Surveillance Fashion, care deeply about preserving.

Potential Abuse and Scope Creep

As automated systems designed to detect Child Sexual Abuse Material (CSAM) continue to evolve, the potential for their abuse by both state and private actors emerges as a critical concern, particularly when one considers the delicate balance between safeguarding children and upholding individual privacy rights.

- The tools, initially purposed for child safety, risk being repurposed for political or social surveillance.

- As detection expands in scope, intrusive surveillance risks becoming normal.

- This function creep may compromise our privacy, intimacy, and personal freedoms without our explicit consent. Because hash-based detection identifies matching content rapidly and at scale, whoever controls the list of hashes effectively controls what gets flagged, which raises concerns about misuse.
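
Hash-based matchers typically use perceptual hashes, which map visually similar images to similar bit strings, and flag anything within a distance threshold of a listed hash. The sketch below uses a simple 64-bit "average hash" over an 8x8 grayscale grid as a stand-in; production algorithms differ and are partly undisclosed, and `average_hash`, `is_match`, and the threshold of 5 bits are hypothetical choices. Note that the matcher is content-agnostic: it flags whatever the list contains.

```python
from typing import Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """64-bit 'average hash' of an 8x8 grayscale image (values 0-255).

    Each bit is 1 if the pixel is brighter than the image's mean, so
    small edits such as uniform brightening leave the hash unchanged.
    """
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 image")
    mean = sum(flat) / 64
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_match(image_hash: int, hash_list: set[int], threshold: int = 5) -> bool:
    """Flag the image if any listed hash is within `threshold` bits.

    The matcher knows nothing about what the list represents: swapping
    in a different list silently changes what gets flagged.
    """
    return any(hamming(image_hash, h) <= threshold for h in hash_list)


if __name__ == "__main__":
    gradient = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
    listed = {average_hash(gradient)}
    brighter = [[p + 3 for p in row] for row in gradient]
    two_tone = [[10] * 8 for _ in range(4)] + [[200] * 8 for _ in range(4)]
    print(is_match(average_hash(brighter), listed))  # True: near-duplicate
    print(is_match(average_hash(two_tone), listed))  # True: unrelated image
```

The second result is a false positive by construction: a plain two-tone image shares the gradient's coarse brightness layout and therefore its exact hash. Real perceptual hashes are far more discriminating, but researchers did demonstrate collisions in Apple's NeuralHash after its model was extracted in 2021.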

Given the abuse potential inherent in these systems and the trend toward surveillance normalization, striking a balance becomes imperative.

Independent audits and clear legal boundaries can protect our rights while keeping the original mission of safeguarding children intact.

High False Positive Rates and Privacy Intrusions

Automated CSAM detection tools were designed to protect children, but they have a darker side: high false-positive rates and significant privacy intrusions. In one widely reported case, Google flagged photos that two fathers had taken of their children’s medical conditions for doctors, leading to false accusations that invaded their private lives. With a staggering 59% of flagged content in the EU reportedly not being illegal, the accuracy of detection remains questionable. The extensive scanning of private communications also raises profound concerns about constitutional rights, since inaccurate flags trigger unwarranted scrutiny and can cause real harm to innocent people. As we navigate the complexities of surveillance technology, the aim of Surveillance Fashion is to shed light on these critical issues and advocate for your right to a secure, private digital existence.
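
Why do accuracy problems become so severe at scale? Much of it is the base-rate effect: when almost all scanned content is innocent, even a small false-positive rate produces more false alarms than true hits. The share of flags that are wrong is FPR·(1 − p) / (FPR·(1 − p) + TPR·p), where p is the prevalence of illegal content. The sketch below computes it; every input number is a hypothetical illustration, not a measurement of any real system.

```python
def flag_breakdown(n_items: int, prevalence: float,
                   true_positive_rate: float,
                   false_positive_rate: float) -> dict[str, float]:
    """Expected correct vs. incorrect flags for a scanner at scale.

    prevalence:          fraction of scanned items that are actually illegal
    true_positive_rate:  fraction of illegal items the scanner flags
    false_positive_rate: fraction of innocent items it wrongly flags
    """
    illegal = n_items * prevalence
    innocent = n_items - illegal
    true_flags = illegal * true_positive_rate
    false_flags = innocent * false_positive_rate
    total = true_flags + false_flags
    return {
        "true_flags": true_flags,
        "false_flags": false_flags,
        # Share of all flags that land on innocent content.
        "share_of_flags_wrong": false_flags / total if total else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical inputs: 1 million items, 1 in 10,000 illegal,
    # 90% detection rate, 0.1% false-positive rate.
    result = flag_breakdown(1_000_000, 0.0001, 0.9, 0.001)
    print(f"{result['share_of_flags_wrong']:.0%} of flags are wrong")  # 92%
```

With these made-up numbers, about 90 true flags are buried among roughly 1,000 false ones, so around 92% of flags are wrong even though the scanner errs on only 0.1% of innocent items.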

Technical and Legal Complexity

Navigating the technical and legal complexities surrounding automated CSAM detection is a formidable challenge, because developers face legal barriers and technical hurdles at the same time.

- Limited access to CSAM for training hinders machine learning model effectiveness.

- Diverging legal frameworks create confusion about what constitutes illegal content.

- The hyper-realistic nature of AI-generated CSAM complicates detection, since newly generated images have no match in databases of previously identified material.

With each jurisdiction imposing its own legal standards, the development of robust classifiers becomes even more complicated.

Developers wrestle with the ethical implications of training on traumatic material, while also grappling with the uncertainties surrounding digital evidence admissibility.

This complex web of laws and technology underscores the urgent need for clearer legal guidelines and innovative detection solutions, such as those we explore at Surveillance Fashion.

Triage and Human Oversight Challenges

Triage and human oversight pose significant challenges in automated CSAM detection systems, particularly given the sheer volume of alerts these technologies generate.

Triage efficiency becomes paramount: human reviewers face overwhelming backlogs and misprioritized alerts, which can delay support for victims and cause critical cases to be overlooked.
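
One common way to reduce misprioritization is to order the review queue by estimated risk rather than arrival time. The sketch below uses Python's `heapq` as a priority queue; the `Alert` fields and the scoring rule (known-hash matches first, then higher classifier confidence) are hypothetical assumptions for illustration, not any platform's actual policy.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    known_hash_match: bool   # hypothetical: matched a vetted hash list
    classifier_score: float  # hypothetical: model confidence in [0, 1]


class TriageQueue:
    """Priority queue that surfaces the highest-risk alerts first."""

    def __init__(self) -> None:
        self._heap: list[tuple[tuple[int, float], int, Alert]] = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order

    @staticmethod
    def _priority(alert: Alert) -> tuple[int, float]:
        # heapq is a min-heap, so smaller tuples are popped first:
        # hash matches get 0, and scores are negated so higher wins.
        return (0 if alert.known_hash_match else 1, -alert.classifier_score)

    def push(self, alert: Alert) -> None:
        entry = (self._priority(alert), next(self._counter), alert)
        heapq.heappush(self._heap, entry)

    def pop(self) -> Alert:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = TriageQueue()
    queue.push(Alert("a", known_hash_match=False, classifier_score=0.40))
    queue.push(Alert("b", known_hash_match=True, classifier_score=0.10))
    queue.push(Alert("c", known_hash_match=False, classifier_score=0.95))
    print([queue.pop().alert_id for _ in range(len(queue))])  # ['b', 'c', 'a']
```

Score-based ordering is only as good as the scores: a poorly calibrated classifier simply moves the misprioritization somewhere else, so human judgment remains necessary.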

Amid this chaos, the mental toll on reviewers must be acknowledged, as they frequently confront traumatic content without adequate psychological safeguards.

Ethical tensions emerge as well: human oversight is necessary, yet it means a person views private content, including that of innocent users who were flagged in error.

Balancing the urgency of triage with the need for respectful handling of sensitive materials is a complex, yet essential endeavor.

Risks of Global Surveillance and Mass Monitoring

While you may approach automated CSAM detection with an understanding of its noble intent (protecting children from exploitation), it’s essential to recognize the global surveillance apparatus that comes with it.

The risks inherent in automated monitoring can’t be overlooked:

- Automated detection relies on sweeping data collection, widening surveillance nets globally.

- Mass monitoring can inadvertently compromise your privacy, cataloging innocuous interactions alongside harmful content.

- Global privacy laws vary, creating regulatory chaos that can enable misuse.

As multinational platforms enforce these systems, your data may become a pawn, subjected to complex geopolitical games where cultural attitudes towards privacy clash dangerously.

On a personal level, dazzle makeup techniques may offer some defense against facial recognition, underscoring the need for innovative privacy solutions in this age of surveillance.

Reflecting on this dynamic is vital; it empowers you to advocate for a more balanced approach between child protection and your personal liberties, echoing the mission behind Surveillance Fashion.

Automated Data Monitoring System

The initiative to combat Child Sexual Abuse Material (CSAM) through automated data monitoring systems represents a significant technological response to a deeply entrenched societal problem, prompting both admiration and scrutiny in equal measure.

Surveillance Through Wearable Technology

As wearable technology continues to reshape personal privacy, the implications of these devices extend far beyond health tracking.

The allure of wearables comes with significant risks:

- Continuous surveillance of your activities and biometric data.

- Gradual privacy erosion, as extensive data is often shared without your clear consent.

- Greater vulnerability to data breaches that expose sensitive health information.

While the promise of convenience may be tempting, it doesn’t negate the ethical dilemmas posed by unauthorized data sharing or the normalization of continuous monitoring. Furthermore, the rise of modern surveillance tools has increased public concern regarding how data is collected, stored, and utilized.

As we explore wearable technology on platforms like Surveillance Fashion, it’s critical to scrutinize how your data is collected and used, allowing you to question the true cost of convenience.

EU Chat Control Child Sexual Abuse Material Detection

As the European Union weighs its controversial Chat Control proposal, which would require detecting child sexual abuse material (CSAM) across messaging platforms, including encrypted services like WhatsApp and Signal, users face profound implications for their privacy and security.

| Concern | Implication |
|---|---|
| Mandatory scanning | Erosion of digital privacy |
| High false-positive rates | Unreliable detection of CSAM |
| Weakened encryption | Increased risk of exploitation |
| Client-side scanning | Mass surveillance without oversight |
| Undermined public trust | Potential decline in use of secure services |

The initiative would mandate automatic scanning, weakening digital infrastructure. Many citizens have voiced concerns, arguing that such measures prioritize surveillance over personal liberty and raising fundamental questions about the trade-off between safety and privacy that we often explore at Surveillance Fashion.

EU Chat Control Proposal Risks and Anti-Surveillance Strategies eBook Review

While many citizens may feel alarmed by the EU Chat Control proposal, which would mandate intrusive scanning of private digital communications, it’s essential to unpack the layers of risk that such regulation entails.

The proposal raises significant concerns related to:

- Privacy violations: Breaking end-to-end encryption undermines data sovereignty.

- AI ethics: Deploying opaque AI for surveillance can exacerbate biases.

- Surveillance creep: Scanning introduced for CSAM detection could expand beyond its legal mandate to monitor other personal data.

As you explore the EU Chat Proposal eBook discussing these issues, consider the implications of granting authorities excessive surveillance powers.

The intersection of technology and civil liberties demands caution: safeguards for our rights must evolve alongside any such measures.

This dialogue is vital as we reflect on the essence of freedom in our digital interactions.

EU Chat Control FAQ

How Do Automated CSAM Detection Systems Impact User Privacy Rights?

Automated CSAM detection systems fundamentally challenge your privacy rights, because they often operate without meaningful user consent or transparency about how your data is processed.

As these systems scan your uploaded content, often without clear permission, they raise concerns about potential overreach and unauthorized surveillance. For instance, while hash-based detection may identify known CSAM effectively, the accompanying lack of transparency diminishes trust.

This uncertainty can lead to hesitance in sharing legitimate content, creating a restrictive digital environment that complicates participatory online experiences.

What Are the Alternatives to Automated CSAM Detection Technology?

While automated CSAM detection is often hailed as a digital savior, alternative strategies such as manual review and encryption techniques offer more measured solutions.

Platforms can employ trained professionals to assess flagged content manually, compensating for the shortcomings of algorithms.

Meanwhile, employing advanced encryption techniques not only enhances user privacy but also complicates illicit content distribution.

Together, these methods promote a more liberty-preserving approach to online safety, fostering a better balance in today’s technological landscape.

How Can Users Protect Their Devices From Intrusive Scanning?

To protect your devices from intrusive scanning, enable robust device encryption, which makes unauthorized access to your stored data much harder, and stay mindful of what you consent to regarding data handling.

Regularly review privacy settings on your apps, limiting permissions that could facilitate scanning. Choose services that prioritize end-to-end encryption, like Signal for messaging, which shields your content from surveillance.

Engaging regularly with communities focused on digital rights further equips you with knowledge to fend off emerging threats effectively.

What Role Do Governments Play in Regulating CSAM Detection?

Governments play an essential role in regulating CSAM detection through the establishment of extensive policies aimed at prevention, detection, and prosecution of offenses.

Effective regulation requires frameworks that balance the protection of children with individual privacy rights. For instance, proposed US legislation such as the STOP CSAM Act would mandate transparency from tech companies while facilitating data access for law enforcement to combat these crimes.

Your awareness of these policies is important for navigating the digital environment responsibly.

How Can Individuals Report Privacy Violations Related to CSAM Detection?

To report privacy violations related to CSAM detection, utilize established reporting mechanisms on platforms, such as their privacy or trust and safety teams.

Document specific details, including the content involved and the nature of your concern. Digital rights organizations such as the Electronic Frontier Foundation (EFF) can provide additional avenues for raising issues.

Remember that platforms often have appeal processes for enforcement actions, giving you a chance to challenge erroneous decisions against your account.

Summary

As automated CSAM detection evolves, the tension between privacy and safety looms larger than ever. You’re left contemplating the implications of client-side scanning, where false positives risk infringing on personal liberties. Technology and a tangle of overlapping laws intertwine, raising questions about whether surveillance comes with adequate safeguards. With the EU’s proposals under scrutiny, the path ahead demands vigilant awareness: what might be sacrificed in the name of security? That question remains open, and it raises fundamental ethical concerns.

